Best Practices Guide for Multi-Account AWS Deployments

One of the central abstractions for managing applications built on AWS is the AWS account itself.

When building your first application, you often start with a single AWS account. Nothing built into AWS suggests otherwise, and you can happily build, test, and deploy a version of your application without thinking much about it. But everything changes once you need to support teams of developers working on many applications.

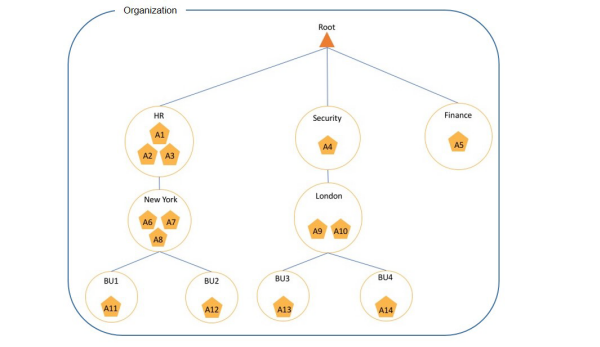

AWS accounts can be grouped hierarchically inside an AWS Organization. Starting from the organization's root, you can arrange accounts into branches (organizational units) that fall under different cost centers and organizational controls. Here’s an example, from this AWS doc on account structures, of what an organization of AWS accounts might look like:

In this example, 14 different AWS accounts are used within one company, and the separate accounts reflect how the company itself is organized.
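To make the hierarchy concrete, here is a minimal Python sketch that models organizational units as a tree and collects every account under one branch. The OU names and account IDs are hypothetical, and this is an in-memory model only; it does not call the AWS Organizations API.

```python
from dataclasses import dataclass, field


@dataclass
class OrgUnit:
    """An organizational unit holding accounts and child OUs (in-memory model only)."""
    name: str
    accounts: list[str] = field(default_factory=list)
    children: list["OrgUnit"] = field(default_factory=list)


def accounts_under(ou: OrgUnit) -> list[str]:
    """Return every account ID in this OU and all of its descendants, depth-first."""
    found = list(ou.accounts)
    for child in ou.children:
        found.extend(accounts_under(child))
    return found


# Hypothetical layout: a Workloads branch with separate dev and prod OUs.
root = OrgUnit("Root", accounts=["111111111111"], children=[
    OrgUnit("Security", accounts=["222222222222"]),
    OrgUnit("Workloads", children=[
        OrgUnit("Dev", accounts=["333333333333", "444444444444"]),
        OrgUnit("Prod", accounts=["555555555555"]),
    ]),
])

workloads = root.children[1]
print(accounts_under(workloads))
```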

Hierarchical AWS accounts solve many business operations concerns, including cost allocation and separation of concerns. They also address challenges in application development and delivery.

The first key concern is limiting who has access to production environments. While the fine-grained access controls in AWS IAM make it possible to separate access within a single AWS account, access that is separated across accounts is much easier to reason about. For example, a team of developers can be given a development AWS account along with permissions to manage resources within it, while a separate production account is locked down so that only the people who need it can access production resources.

Second, AWS service limits (quotas) apply at the account level. For example, Lambda's default quota is 1,000 concurrent executions per Region, shared by all functions in the account. If you mix production and development resources in the same AWS account, a runaway recursive Lambda invocation in development could exhaust the available concurrency and break the production infrastructure.
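The shared-limit problem is easy to see with a little arithmetic. The sketch below assumes a single pool of 1,000 concurrent executions shared by a development function that recursively doubles its in-flight invocations at each step and a production function that needs a steady 50. The numbers are illustrative; for simplicity the model lets dev claim capacity first and ignores reserved concurrency and execution duration.

```python
ACCOUNT_LIMIT = 1_000  # default per-Region concurrency quota, shared by all functions
PROD_DEMAND = 50       # hypothetical steady production load


def simulate(steps: int) -> list[tuple[int, int, int]]:
    """Return (step, dev_in_flight, prod_throttled) for each step of a runaway recursion."""
    results = []
    dev = 1
    for step in range(steps):
        # Dev claims capacity first in this model; prod gets whatever is left.
        dev_running = min(dev, ACCOUNT_LIMIT)
        prod_available = ACCOUNT_LIMIT - dev_running
        prod_throttled = max(0, PROD_DEMAND - prod_available)
        results.append((step, dev_running, prod_throttled))
        dev *= 2  # each invocation triggers two more
    return results


for step, dev_running, throttled in simulate(12):
    print(f"step {step:2}: dev={dev_running:4} prod throttled={throttled}")
```

After about ten doublings the development function holds the entire pool, and every production request is throttled even though production itself did nothing wrong.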

An organization of hierarchical AWS accounts is a key best practice. However, this additional level of abstraction brings additional challenges in developing and deploying applications.

This guide will give you key strategies for deploying the same application across multiple AWS accounts.

Infrastructure-as-Code is critical

Replicating all the resources necessary to run applications requires automated infrastructure deployment. While deployments could be automated with scripts and other bespoke tooling, advancements in the past few years have established Infrastructure-as-Code as the most productive way to describe and deploy applications. The biggest player in Infrastructure-as-Code on AWS is AWS CloudFormation, which describes all your resources and the connections between them. You write a single template that defines the application infrastructure, and CloudFormation deploys those resources in a repeatable fashion.
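As a minimal illustration of "write once, deploy repeatably", the Python sketch below builds one CloudFormation template (in JSON form) with an `Environment` parameter and a single SQS queue whose name is derived from it. The queue and its naming scheme are hypothetical; the point is that the same template serves every account, and only the parameter value changes.

```python
import json


def build_template() -> dict:
    """Return a minimal CloudFormation template parameterized by environment name."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            "Environment": {
                "Type": "String",
                "AllowedValues": ["dev", "staging", "prod"],
            }
        },
        "Resources": {
            "OrdersQueue": {
                "Type": "AWS::SQS::Queue",
                "Properties": {
                    # Fn::Sub substitutes the parameter value at deploy time.
                    "QueueName": {"Fn::Sub": "orders-${Environment}"}
                },
            }
        },
    }


template_body = json.dumps(build_template(), indent=2)
print(template_body)
```

The same `template_body` can then be deployed to each account with a different `Environment` value.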

If you’re struggling to master the AWS CloudFormation template format, a tool like Stackery’s visual editor can help by generating the template from a visual design.

There’s even a Visual Studio Code plugin from Stackery that offers a visual editor for your CloudFormation templates.

So if everything is working as it should, you now have your application code and your infrastructure template. Having a template means you can replicate the application without manual steps. This reduces errors and increases the speed of deployments.

This is also why it’s a good idea to store your infrastructure template in the same code repository as your application code. Keeping everything in one place makes it easier to repeat the process when deploying across accounts.

Parameterizing deployments

With your Infrastructure-as-Code and application code in one repository, you’re ready to deploy your app to multiple accounts. Most applications need a way to differentiate between dev and prod environments, which requires a strategy for parameterization. AWS makes this easier with AWS Systems Manager Parameter Store and AWS Secrets Manager, which hold per-environment configuration values and secrets outside your code and templates.

To use CloudFormation with parameters, you can take advantage of the built-in integration with AWS Systems Manager Parameter Store. There’s a great post detailing this process on the AWS Blog.
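One form this integration takes is SSM parameter types: a template parameter declared as `AWS::SSM::Parameter::Value<String>` takes a Parameter Store *name*, and CloudFormation looks up the stored value at deploy time. The sketch below builds such a parameter for a given environment; the `/myapp/<env>/<key>` naming scheme is a hypothetical convention, not something CloudFormation requires.

```python
def ssm_backed_parameter(env: str, key: str) -> dict:
    """Return a CloudFormation parameter whose value is looked up in Parameter Store."""
    return {
        # CloudFormation resolves the value stored under the name given in Default.
        "Type": "AWS::SSM::Parameter::Value<String>",
        "Default": f"/myapp/{env}/{key}",  # hypothetical path convention
    }


parameters = {"ApiUrl": ssm_backed_parameter("dev", "api-url")}
print(parameters)
```

Because Parameter Store values live in each account, the prod account can store a different value under its own name (or the parameter can be overridden with the prod path), while the template itself stays unchanged.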

Stackery’s environment management can be very effective here, as it allows you to group parameters in a namespaced environment, which makes managing parameters and secrets easy.
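To illustrate what a namespaced environment buys you, here is a hypothetical Python helper that groups flat parameter paths of the form `/<env>/<key>` into one mapping per environment. This is not how Stackery implements environments; it only shows why a consistent prefix makes per-environment parameters easy to manage.

```python
from collections import defaultdict


def group_by_environment(params: dict[str, str]) -> dict[str, dict[str, str]]:
    """Group '/<env>/<key...>' parameter paths into one namespace per environment."""
    grouped: dict[str, dict[str, str]] = defaultdict(dict)
    for path, value in params.items():
        env, _, key = path.lstrip("/").partition("/")
        if not key:
            raise ValueError(f"expected '/<env>/<key>', got {path!r}")
        grouped[env][key] = value
    return dict(grouped)


flat = {
    "/dev/db/host": "dev-db.internal",
    "/dev/feature-flags": "beta",
    "/prod/db/host": "prod-db.internal",
}
print(group_by_environment(flat))
```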

Manage deployments on a per-account basis

It can be tempting to centralize deploys in a single account: a “core deploy” system where one account has access to all the others and is the only place code is released. Unfortunately, this doesn’t scale with organization size, because every release is funneled through one central process. As you grow, you’ll want to give people access to deploy to some environments and not others. It’s best, therefore, to manage deploys inside the accounts you are deploying into.

The biggest hurdle to this strategy is how your team moves between these accounts day to day. AWS SSO (now AWS IAM Identity Center) is a great way to give developers access to multiple AWS accounts with just the right level of permissions for their role.
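The per-account model boils down to a lookup of which group may deploy to which account. In AWS SSO that mapping is expressed as account assignments, each granting a permission set to a user or group in a specific account. The Python below is only a hypothetical in-memory model of that idea, with made-up group names, account IDs, and permission-set names; it does not use the SSO API.

```python
# Hypothetical assignments: (group, account_id) -> permission set name
ASSIGNMENTS = {
    ("developers", "333333333333"): "DeployAccess",
    ("developers", "555555555555"): "ReadOnly",
    ("release-managers", "555555555555"): "DeployAccess",
}


def can_deploy(groups: set[str], account_id: str) -> bool:
    """True if any of the user's groups holds the deploy permission set in this account."""
    return any(
        ASSIGNMENTS.get((group, account_id)) == "DeployAccess" for group in groups
    )


print(can_deploy({"developers"}, "333333333333"))  # dev account
print(can_deploy({"developers"}, "555555555555"))  # prod account
```

Developers can deploy to the dev account but only read prod, while release managers hold deploy access to prod, which is exactly the "some environments and not others" split described above.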

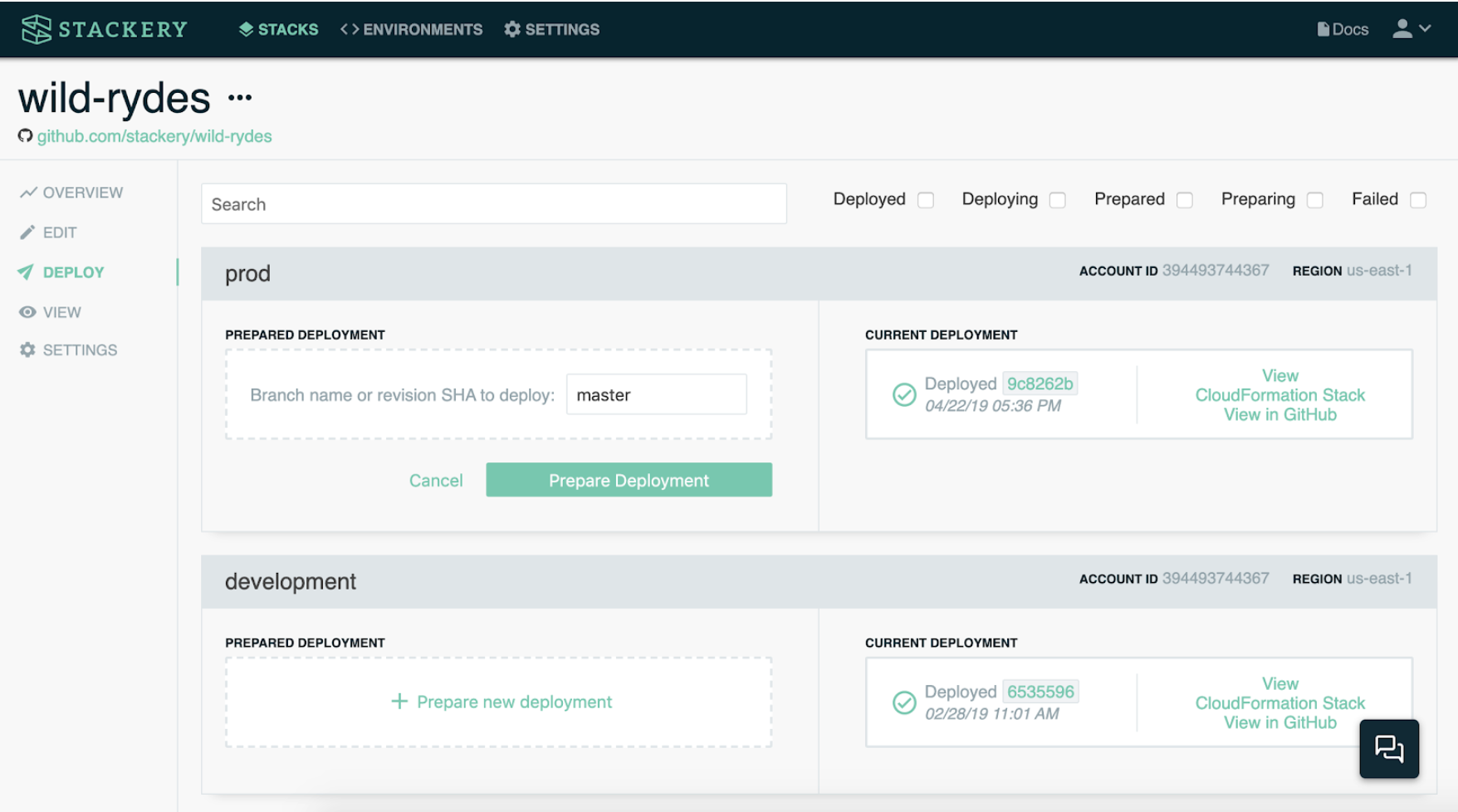

As an example, when you link multiple AWS accounts to Stackery, each AWS account is set up with its own build infrastructure (an AWS CodeBuild project, AWS CloudFormation stacks, etc.). This architecture enables Stackery’s central service to prepare deployments inside each user’s AWS account, then ask the user to use their AWS credentials (manually or via automation) to execute the changes.
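This prepare/execute split works because a CloudFormation change set belongs to one account and Region, both of which are encoded in its ARN (`arn:aws:cloudformation:<region>:<account>:changeSet/<name>/<id>`). The hypothetical Python check below parses a change set ARN and refuses to execute it with credentials for a different account. CloudFormation already scopes change sets to their account; this sketch just makes the constraint explicit and is not Stackery's code.

```python
from typing import NamedTuple


class ChangeSetRef(NamedTuple):
    region: str
    account_id: str
    name: str


def parse_change_set_arn(arn: str) -> ChangeSetRef:
    """Split arn:aws:cloudformation:<region>:<account>:changeSet/<name>/<id>."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[2] != "cloudformation" or not parts[5].startswith("changeSet/"):
        raise ValueError(f"not a CloudFormation change set ARN: {arn!r}")
    name = parts[5].split("/")[1]
    return ChangeSetRef(region=parts[3], account_id=parts[4], name=name)


def check_execution_account(arn: str, caller_account_id: str) -> None:
    """Raise if the caller's credentials belong to a different account than the change set."""
    ref = parse_change_set_arn(arn)
    if ref.account_id != caller_account_id:
        raise PermissionError(
            f"change set {ref.name} lives in {ref.account_id}, caller is {caller_account_id}"
        )


arn = "arn:aws:cloudformation:us-east-1:333333333333:changeSet/deploy-42/abc123"
check_execution_account(arn, "333333333333")  # same account: no error
```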

Stackery’s Deploy dashboard provides controls and a single-pane view of what has been deployed into which AWS account and Region.

Automation is the final step

Once you have deployed your code with the necessary parameters, you will face one last issue: it’s tedious to have engineers log into each of these accounts to deploy your applications.

A better strategy would be to make use of some kind of automation. But how do you ensure that this automation has the necessary permissions?

This is where you need to set up IAM roles that become part of the deployment mechanism. For example, if you’re using AWS CodeBuild jobs, they must be able to assume an IAM role that can deploy all the resources for your application. Grant that role only the minimum set of permissions required to manage those resources.
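For CodeBuild to use such a role, the role's trust policy has to allow the CodeBuild service to assume it. The sketch below generates that trust policy document in Python; the service principal `codebuild.amazonaws.com` and the `sts:AssumeRole` action are standard, while what the role may actually do comes from a separate permissions policy attached to it.

```python
import json


def codebuild_trust_policy() -> dict:
    """Trust policy that lets the AWS CodeBuild service assume the deployment role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "codebuild.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(codebuild_trust_policy(), indent=2))
```

Keeping the trust policy (who may assume the role) separate from the permissions policy (what the role may do) makes it easier to review each one for least privilege.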

For example, you can configure an AWS CodeBuild job to run a deployment that provisions AWS Lambda functions using the Stackery CLI, giving it a role with permissions to execute CloudFormation change sets and provision the resources in the template. This is an example AWS IAM policy granting the permissions used to manage Lambda functions:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "cloudformation:ExecuteChangeSet",
        "lambda:AddPermission",
        "lambda:CreateAlias",
        "lambda:CreateEventSourceMapping",
        "lambda:CreateFunction",
        "lambda:DeleteAlias",
        "lambda:DeleteEventSourceMapping",
        "lambda:DeleteFunction",
        "lambda:DeleteFunctionConcurrency",
        "lambda:GetAlias",
        "lambda:GetEventSourceMapping",
        "lambda:GetFunction",
        "lambda:GetFunctionConfiguration",
        "lambda:ListAliases",
        "lambda:ListEventSourceMappings",
        "lambda:ListFunctions",
        "lambda:ListTags",
        "lambda:ListVersionsByFunction",
        "lambda:PublishVersion",
        "lambda:PutFunctionConcurrency",
        "lambda:TagResource",
        "lambda:UntagResource",
        "lambda:UpdateAlias",
        "lambda:UpdateEventSourceMapping",
        "lambda:UpdateFunctionCode",
        "lambda:UpdateFunctionConfiguration"
      ],
      "Resource": "*"
    }
  ]
}
```
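Before handing a policy like this to automation, it can help to check it against the actions your deployment actually calls. The Python sketch below is a deliberately simplified matcher (Allow statements only, case-insensitive wildcard matching on action names, no conditions, resources, or Deny handling), so it is no substitute for the IAM policy simulator. Run against an excerpt of the policy above, it surfaces one gap worth knowing about: creating a Lambda function generally also requires `iam:PassRole` on the function's execution role, which this policy does not grant.

```python
from fnmatch import fnmatchcase

# Excerpt of the policy above (Allow statements only).
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "cloudformation:ExecuteChangeSet",
                "lambda:CreateFunction",
                "lambda:UpdateFunctionCode",
                "lambda:UpdateFunctionConfiguration",
            ],
            "Resource": "*",
        }
    ],
}


def is_allowed(policy: dict, action: str) -> bool:
    """Simplified check: does any Allow statement list an action matching `action`?"""
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive and may contain * or ? wildcards.
        if any(fnmatchcase(action.lower(), pattern.lower()) for pattern in actions):
            return True
    return False


required = ["lambda:CreateFunction", "iam:PassRole"]
missing = [a for a in required if not is_allowed(POLICY, a)]
print(missing)
```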

Then, the CodeBuild project’s buildspec asks Stackery to deploy the stack:

```yaml
version: 0.2

phases:
  install:
    runtime-versions:
      nodejs: 10
    commands:
      - curl -Ls https://ga.cli.stackery.io/linux.zip > stackery.zip
      - unzip stackery.zip
  post_build:
    commands:
      - stackery deploy --stack-name functions --env-name staging --execute-changeset $CHANGE_SET_ARN
```

When the CodeBuild job runs, you pass in the ARN of the prepared CloudFormation change set (as the CHANGE_SET_ARN environment variable), and Stackery does the rest using the permissions granted to the CodeBuild project.
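To trigger that build from a script, you could call CodeBuild's `StartBuild` API and override the `CHANGE_SET_ARN` environment variable for that run. The sketch below only assembles the request arguments, so it runs without AWS access; the project name is hypothetical, and the commented lines show where boto3's `start_build` would take them.

```python
def start_build_request(project_name: str, change_set_arn: str) -> dict:
    """Keyword arguments for CodeBuild StartBuild, passing the change set ARN to the buildspec."""
    return {
        "projectName": project_name,
        "environmentVariablesOverride": [
            # The buildspec reads this as $CHANGE_SET_ARN.
            {"name": "CHANGE_SET_ARN", "value": change_set_arn, "type": "PLAINTEXT"}
        ],
    }


request = start_build_request(
    "functions-staging-deploy",  # hypothetical CodeBuild project name
    "arn:aws:cloudformation:us-east-1:333333333333:changeSet/deploy-42/abc123",
)
# With boto3 installed and credentials for the target account:
#   import boto3
#   boto3.client("codebuild").start_build(**request)
print(request)
```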

A good multi-account strategy grows with you

The requirements of a large-scale multi-account architecture aren’t all practical while your application is still a prototype. If you’re only releasing once a week, it’s perfectly reasonable to deploy each release manually to dev and then to prod using the AWS CLI.
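At that stage, a manual release can be as simple as running `aws cloudformation deploy` once per environment, each time with that account's CLI profile and parameter values. The Python sketch below only builds and prints the commands (a dry run); the profile names, stack name, and template file are hypothetical, while `--template-file`, `--stack-name`, `--parameter-overrides`, and `--profile` are standard AWS CLI options.

```python
import shlex


def deploy_command(env: str, profile: str) -> list[str]:
    """Build an `aws cloudformation deploy` command for one environment/account."""
    return [
        "aws", "cloudformation", "deploy",
        "--template-file", "template.json",  # hypothetical template path
        "--stack-name", f"orders-{env}",     # hypothetical stack naming
        "--parameter-overrides", f"Environment={env}",
        "--profile", profile,                # CLI profile for the target account
    ]


# Release to dev first, then prod, in turn.
for env, profile in [("dev", "myapp-dev"), ("prod", "myapp-prod")]:
    print(shlex.join(deploy_command(env, profile)))
```

Replacing `print` with `subprocess.run(deploy_command(env, profile), check=True)` would execute the commands instead of printing them.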

As you grow, you’ll want to adopt the key pieces mentioned here:

- Infrastructure-as-Code

- Parameterization tooling

- Automation of releases

With these pieces in place, your team should have the sandbox it needs to experiment, and the production stability you need to deliver high-performing applications.
