The '8 Fallacies of Distributed Computing' Aren't Fallacies Anymore

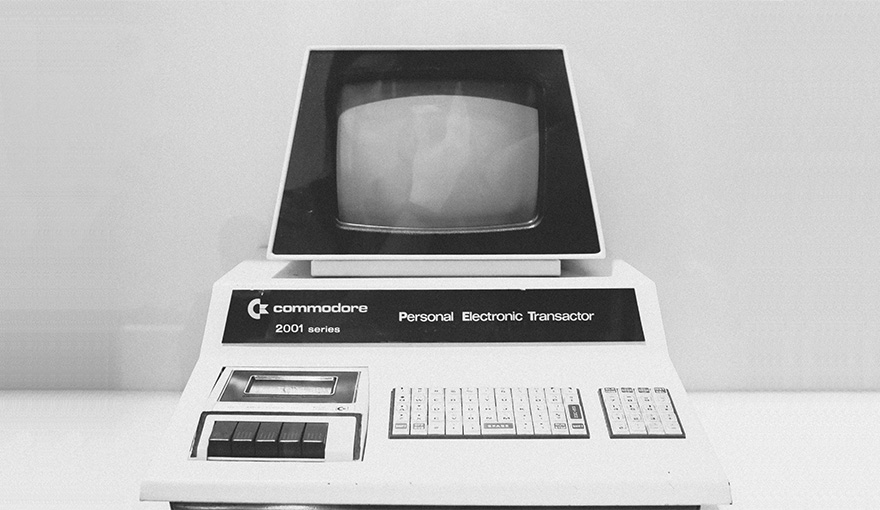

In the mid-90s, centralized 'mainframe' systems were in direct competition with microcomputing for dominance of the technology marketplace and developers' time. Peter Deutsch, a Sun Microsystems engineer who was a 'thought leader' before we had the term, wrote down seven false assumptions that many developers made about distributed computing, to which James Gosling added one more to make the famous list of The 8 Fallacies of Distributed Computing.

- The network is reliable

- Latency is zero

- Bandwidth is infinite

- The network is secure

- Topology doesn't change

- There is one administrator

- Transport cost is zero

- The network is homogeneous

Microcomputing would win that debate in the '90s, with development shifting to languages and platforms that could be run on a single desktop machine. Twenty years later we're still seeing these arguments used against distributed computing, especially against its most rarefied version, serverless. Recently, more than one person has replied to these fallacies by saying they 'no longer apply' or 'aren't as critical' as they once were, but the truth is that none of these are fallacies anymore.

How can these fallacies be true? There are still latency and network vulnerabilities.

Before about 2000, the implied comparison with local computing didn't need to be stated: "The network is more reliable than your local machine..." was obviously a fallacy. But now that assumption is the one under examination. Networks still have latency, but the superiority of local machines over networks is now either in doubt or absent.

1. The Network is Reliable

This is a pretty good example of how the list of fallacies 'begs the question.' What qualifies as 'reliable'? Now that we have realistic stats about hard drive failure rates, we know that even a local cluster with RAID has some failure rate, so the real question is whether the network is less reliable than the hardware you run yourself.

2. Latency is Zero

Latency is how much time it takes for data to move from one place to another (versus bandwidth, which is how much data can be transferred during that time). The reference here is to mainframe 'thin client' systems where every few keystrokes had to round-trip to a server over an unreliable, laggy network.
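The difference between the two terms can be made concrete with a rough back-of-the-envelope model: the time for one request is approximately the latency plus the payload size divided by the bandwidth. The sketch below is only an illustration; the function name and the link figures (100 ms latency, 1 MB/s bandwidth, 50 keystrokes) are hypothetical values chosen to show the shape of the problem, not measurements.

```python
def transfer_time_s(payload_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Rough model: fixed latency cost plus time to push the payload through the link."""
    return latency_s + payload_bytes / bandwidth_bytes_per_s


# Hypothetical link: 100 ms latency, 1 MB/s bandwidth.
LATENCY_S = 0.1
BANDWIDTH = 1_000_000

# Thin-client style: every one of 50 single-byte keystrokes makes its own trip.
per_keystroke_total = 50 * transfer_time_s(1, LATENCY_S, BANDWIDTH)

# Batched style: all 50 bytes travel in a single request.
batched_total = transfer_time_s(50, LATENCY_S, BANDWIDTH)

print(f"one trip per keystroke: {per_keystroke_total:.2f} s")
print(f"one batched request:    {batched_total:.2f} s")
```

Under this model the per-keystroke approach is dominated almost entirely by latency, which is why more bandwidth alone would never have fixed the thin-client experience.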

While our networking technology is a lot better, another major change has been effective AJAX and async tools that check in when needed and show responsive interfaces all the time. On top of a network request structure that hasn't been much updated since the '90s, and a browser whose memory needs seem to double annually, we still manage to run cloud IDEs that perform pretty well.
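As a minimal sketch of that async pattern (not how any particular cloud IDE works), the example below keeps every keystroke in a local buffer so the interface never waits, while a background task syncs snapshots to a simulated server. The names `fake_server_save` and `edit_session`, and the 10 ms latency, are assumptions made up for illustration.

```python
import asyncio


async def fake_server_save(text: str, latency_s: float) -> str:
    """Stand-in for a network call: waits, then echoes what the 'server' stored."""
    await asyncio.sleep(latency_s)
    return text


async def edit_session(keystrokes: str, latency_s: float = 0.01):
    buffer = []               # local state, updated instantly on every keystroke
    saved = ""                # last snapshot the simulated server acknowledged
    round_trips = 0
    dirty = asyncio.Event()   # signals that there are unsynced local changes
    done = False

    async def sync_loop():
        nonlocal saved, round_trips
        while True:
            await dirty.wait()
            dirty.clear()
            snapshot = "".join(buffer)
            saved = await fake_server_save(snapshot, latency_s)
            round_trips += 1
            # Stop once typing has finished and the server has the latest text.
            if done and saved == "".join(buffer):
                return

    syncer = asyncio.create_task(sync_loop())
    for ch in keystrokes:
        buffer.append(ch)       # the "UI" reflects the keystroke immediately
        dirty.set()             # ask the background task to sync when it can
        await asyncio.sleep(0)  # yield to the event loop without blocking on the network
    done = True
    dirty.set()
    await syncer
    return saved, round_trips


if __name__ == "__main__":
    text, trips = asyncio.run(edit_session("hello world"))
    print(f"server has {text!r} after {trips} round trips")
```

The point of the design is that typing never awaits the network: the number of round trips depends on how fast the link is, not on how many keys were pressed.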

3. Bandwidth is Infinite

Bandwidth still costs something, but beyond the network improvements mentioned above, the cost of bandwidth has become extremely tiny. Bills for bandwidth do exist, and I've even seen some teams optimize to try to save on their bandwidth costs!

In general this costs way more in developer wages than it saves, and brings us to the key point. Bandwidth still costs money, but the limited resource is not technology but people. You can buy a better network connection at 2 AM on a Saturday, but you cannot, at that hour, hire a SQL expert who can halve your number of DB queries.
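One way to check that claim for your own team is a simple payback calculation: divide the cost of the engineering time by the monthly savings. The function name and every number below are hypothetical placeholders, so plug in your own figures.

```python
def optimization_payback_months(engineer_hours: float,
                                hourly_cost: float,
                                monthly_bandwidth_savings: float) -> float:
    """Months until the bandwidth savings repay the engineering time spent."""
    if monthly_bandwidth_savings <= 0:
        return float("inf")  # the work never pays for itself
    return engineer_hours * hourly_cost / monthly_bandwidth_savings


# Hypothetical inputs: two engineer-weeks at $100/hour to save $200/month.
months = optimization_payback_months(engineer_hours=80,
                                     hourly_cost=100,
                                     monthly_bandwidth_savings=200)
print(f"payback period: {months:.0f} months")
```

With those made-up inputs the optimization takes more than three years to break even, and that ignores whatever else those engineers could have built in the meantime.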

4. The Network is Secure

As Bloomberg struggles to back up its reports of a massive bugging attack against server hardware, many people want to return to a time when networks were inherently untrustworthy. More accurately, since few developers can do their jobs without constant network access to at least GitHub and npm, untrustworthy networks are an easy scapegoat for the poor operational security practices that almost everyone commits.

The Dimnie attack, which peaked in 2017, targeted the actual spot where most developers are vulnerable: their laptops. With enough access to load in-memory modules on your home machine, attackers can corrupt your code repos with malicious content. In a well-run dev shop, it's the private computing resources that tend to be entry points for malicious attacks. The laptops that we take home with us for personal use should be the least trusted component.

5. Topology Doesn't Change

With the virtualization options available in something serverless like AWS's Relational Database Service (RDS), it's likely that topology never has to change from the point of view of the client. In a local or highly controlled environment, there are setups where no DB architectures, interfaces, or request structures have changed in years. This is called 'Massive Tech Debt'.

6. There is One Administrator

If this isn't totally irrelevant (no one works on code that has a single human trust source anymore, or if they do, that's... real bad; get a second GitHub admin, please), it might still be a reason to use serverless and not roll your own network config.

For people just dipping a toe into managed services, there are still horror stories about the single AWS admin leaving for 6 weeks of vacation, deciding to join a monastery, and leaving the dev team unable to make changes. In those situations, where there wasn't much consideration of the 'bus factor' on your team, there still is just one administrator: the service provider. As long as you're the one paying for the service, you can wrest back control.

7. Transport Cost is Zero

Yes, transport cost is zero. This one is just out of date.

8. The Network is Homogeneous

Early networked systems had real issues with this. I am reminded of the college that reported it could only send emails to places within 500 miles. There are 'special places' in a network that can confound your tests and your general understanding of the network.

It isn't so much that this fallacy is now true as that the awkward parts of a network are now clearly labelled as such. CI/CD explicitly tests in a controlled environment, and even AWS, which does its darndest to present you with a smooth, homogeneous system, intentionally makes you aware of geographic zones.

Conclusions

We've all seen people on Twitter pointing at an AWS outage and shouting that this means we should 'not trust someone else's computer,' but I've never seen an AWS-hosted service have even 1/10th the outages of a self-hosted service. Next time someone shares a report of a 2-hour outage in a single Asian AWS region, ask to see their red team logs from the last 6 months.

At Stackery, we have made it our mission to make modern cloud infrastructure as accessible and useful as possible. Get your engineering team the best solution for building, managing and scaling serverless applications with Stackery today.
