Think Twice Before Decomposing Your APIs For Serverless

Even though AWS Lambda was first released two and a half years ago, serverless tech is still new. Everyone is still trying to sort out how to build applications with it. Best practices are in their infancy. One technique that has been in vogue is decomposing REST APIs down to a one-to-one mapping of endpoints and serverless functions. But is this the best way to build an API service?

The Short History Of Serverless APIs

From the moment AWS API Gateway was released, it seemed everyone began building APIs with independent endpoint functions. There are benefits to this approach, mostly around decoupling development among truly independent APIs and their versions. But I've been left wondering why practically everyone started building serverless APIs this way. The closest rationale I have found is buried in this blog post: "Let serverless solve the technology problems you don't have." It mentions how serverless can help you avoid building monolithic architectures because you aren't required to serve all routes for an API from the same code base. This is an important benefit of serverless, but it doesn't require you to break down every endpoint so that each is backed by its own function.

Without a compelling rationale for independent endpoint functions, I'm left to wonder if this paradigm sprang forth as an unintended consequence of the tools at hand. In the vein of "if all you have is a hammer, everything looks like a nail," I have a theory that everyone has become accustomed to API decomposition by default because of early limitations of AWS API Gateway.

AWS API Gateway has evolved substantially since its initial release two years ago. In its first iteration it lacked the ability to proxy more than one route at a time to a given function. You could back all your individually specified routes with the same AWS Lambda function, but it felt as though API Gateway was suggesting each endpoint should have a different backing function. Thankfully, API Gateway introduced proxy resources last fall, which let a subset of an API's routes, or even all of them, be handled by a single API Gateway resource backed by a single AWS Lambda function. With this change we could finally think of serverless as an efficient compute platform for APIs regardless of how you architect their implementations.
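To make the proxy resource idea concrete, here is a minimal sketch of one function serving several routes. It assumes the function sits behind a greedy proxy resource (for example `/{proxy+}`) using Lambda proxy integration, so the request method and path arrive as `event.httpMethod` and `event.path`. The route table, the paths, and the `dispatch` helper are hypothetical names chosen for illustration, not part of any library.

```javascript
// Route table for the whole API. Paths and handlers are hypothetical.
const routes = new Map([
  ["GET /health", () => ({ statusCode: 200, body: JSON.stringify({ ok: true }) })],
  ["GET /users", () => ({ statusCode: 200, body: JSON.stringify({ users: [] }) })],
]);

// Pure dispatch step: choose a handler from the method and path that the
// Lambda proxy integration places on the event.
function dispatch(event) {
  const route = routes.get(`${event.httpMethod} ${event.path}`);
  if (!route) {
    return { statusCode: 404, body: JSON.stringify({ error: "Not Found" }) };
  }
  return route(event);
}

// Single Lambda entry point for every route. Export it using your project's
// module style (for example, `exports.handler = handler` in CommonJS).
async function handler(event) {
  return dispatch(event);
}
```

In practice an existing framework would take the place of the hand-rolled route table; the point is only that one function can receive and dispatch every route.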

What's Wrong With Decomposed APIs?

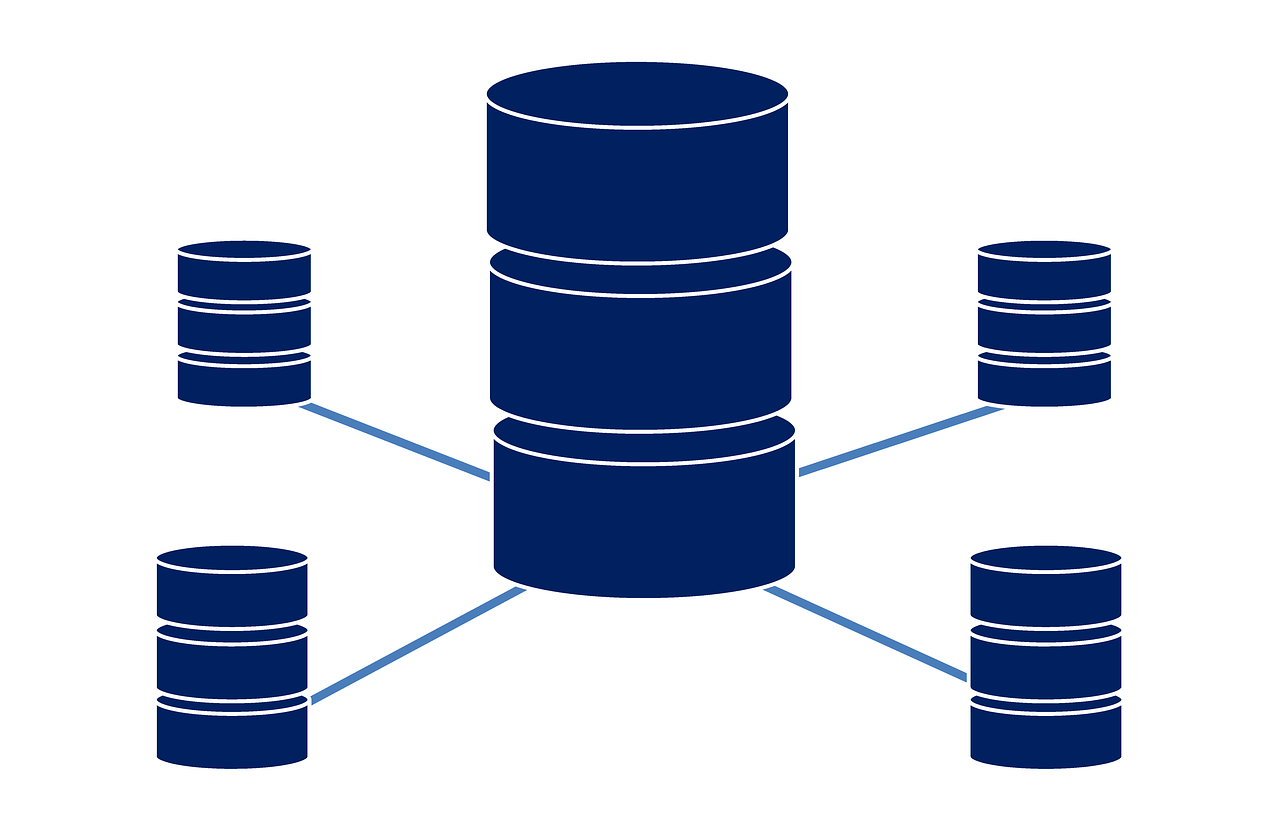

You may be asking yourself right now what the big deal is. Why does it matter if my API is built from many independent serverless functions or a single "monolithic" function? It turns out there are many non-obvious challenges when running a decomposed API that become moot when running a "monolithic" serverless API.

The most operationally impactful challenge with a decomposed API is cold starts. After a serverless function is invoked, its runtime is cached for a period of time. If that same function is invoked while the runtime is cached, the function does not need to be initialized again and responds with much lower latency. A "cold start" refers to a function invocation where the runtime is created and initialized from scratch rather than reused from a cached runtime, and thus has a higher latency. The best way to prevent cold starts today is to keep a certain number of function instances "warm". This is easily accomplished by periodically "pinging" the function several times in parallel. What makes this more complicated, however, is having a different serverless function for every endpoint of your API. Keeping each endpoint's function warm then requires a much larger number of "pings". It's much simpler to keep one function warm than it is to keep many functions warm. It is also easier to monitor cold starts and overall latency for one function than for many.

The other challenge with decomposing APIs is managing basic REST API functionality. There are many REST frameworks out there that already handle routing, path and query string parameters, cookies, and essential security concerns. These frameworks were architected under the assumption that they would handle routing and request handling for multiple endpoints. Rather than reinventing the wheel by writing new frameworks for single-endpoint functions, why not use existing tried-and-true frameworks?
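Returning to warming for a moment, the sketch below puts rough numbers on it. It assumes every function is kept at the same number of warm instances, and that warming pings are marked with a `warmer: true` field on the event. That field, like the function names here, is a hypothetical convention for illustration rather than anything AWS defines.

```javascript
// Parallel pings needed each warming interval, assuming every function
// is kept at the same number of warm instances.
function pingsPerInterval(functionCount, warmInstancesPerFunction) {
  return functionCount * warmInstancesPerFunction;
}

// Hypothetical convention: the scheduled warmer sends { warmer: true }.
function isWarmingPing(event) {
  return Boolean(event && event.warmer === true);
}

// Lambda entry point. Export it using your project's module style.
async function handler(event) {
  if (isWarmingPing(event)) {
    // Return immediately so warming pings stay cheap.
    return { statusCode: 204, body: "" };
  }
  // Real requests would be routed and handled here.
  return { statusCode: 200, body: JSON.stringify({ message: "real request" }) };
}
```

With these assumptions, an API split into 20 endpoint functions at 5 warm instances each needs `pingsPerInterval(20, 5)`, or 100 pings per interval, while a single function at the same warmth needs `pingsPerInterval(1, 5)`, or 5.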
For an example of using an existing framework this way, check out our blog post on how you can serve a complete API service using one serverless function and the Hapi framework.

Lastly, decomposing functionality requires architecting a way to share common functionality. If you need a cookie parser for many endpoints, you will need to find a way to share the code among those endpoint serverless functions. In one sense this is a solved problem. You can break common code out into dependencies like individual Node.js packages and then include the dependencies in all the endpoint functions. But this is a solution to a problem (decomposed APIs) that you wouldn't have to address in the first place if you were building a "monolithic" API function.
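To illustrate that last point, here is a minimal sketch of common logic living in one place inside a single function: cookies are parsed once, before routing, and every route receives the result. The `parseCookies` helper is a deliberately simplified, hypothetical stand-in (it does not decode values), and the routes are invented for the example; a real service would more likely lean on its framework's cookie support.

```javascript
// Hypothetical minimal cookie parser, for illustration only.
// Values are stored as-is, without URL decoding.
function parseCookies(header) {
  const cookies = {};
  for (const pair of (header || "").split(";")) {
    const index = pair.indexOf("=");
    if (index === -1) continue; // skip fragments without a name=value shape
    const name = pair.slice(0, index).trim();
    if (name) cookies[name] = pair.slice(index + 1).trim();
  }
  return cookies;
}

// Hypothetical routes; each receives the already-parsed cookies.
const routes = new Map([
  ["GET /profile", (event, ctx) => ({
    statusCode: 200,
    body: JSON.stringify({ session: ctx.cookies.session || null }),
  })],
  ["GET /cart", (event, ctx) => ({
    statusCode: 200,
    body: JSON.stringify({ cartId: ctx.cookies.cart || null }),
  })],
]);

// Shared step runs once here, so no route needs its own copy of the parser.
function dispatch(event) {
  const headers = event.headers || {};
  const ctx = { cookies: parseCookies(headers.Cookie || headers.cookie) };
  const route = routes.get(`${event.httpMethod} ${event.path}`);
  return route ? route(event, ctx) : { statusCode: 404, body: "" };
}
```

Because the parser is an ordinary function in the same code base, there is no package to publish and no dependency to keep in sync across endpoint functions.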

Architect Your Application Based On Its Needs

The underlying point I want to get across is that you should architect your application based on its needs. There are times when you want independent API implementations. A good example is when you are building a new version of an existing API on the same domain, but you want to write the new version on a clean code base decoupled from the original implementation. This is a perfect scenario for using different serverless functions to back the different API versions. But don't feel pressured to rearchitect your entire API to fit into the new serverless model.