The Serverless Learning Curve

As with any new technology, there's a lot of hype around FaaS-style "serverless" architectures. You may have heard of the many benefits of serverless: faster time to market, lower infrastructure costs, and impressive scalability. While these are all true (and enormously advantageous), it's important to identify some of the challenges of this new infrastructure approach.

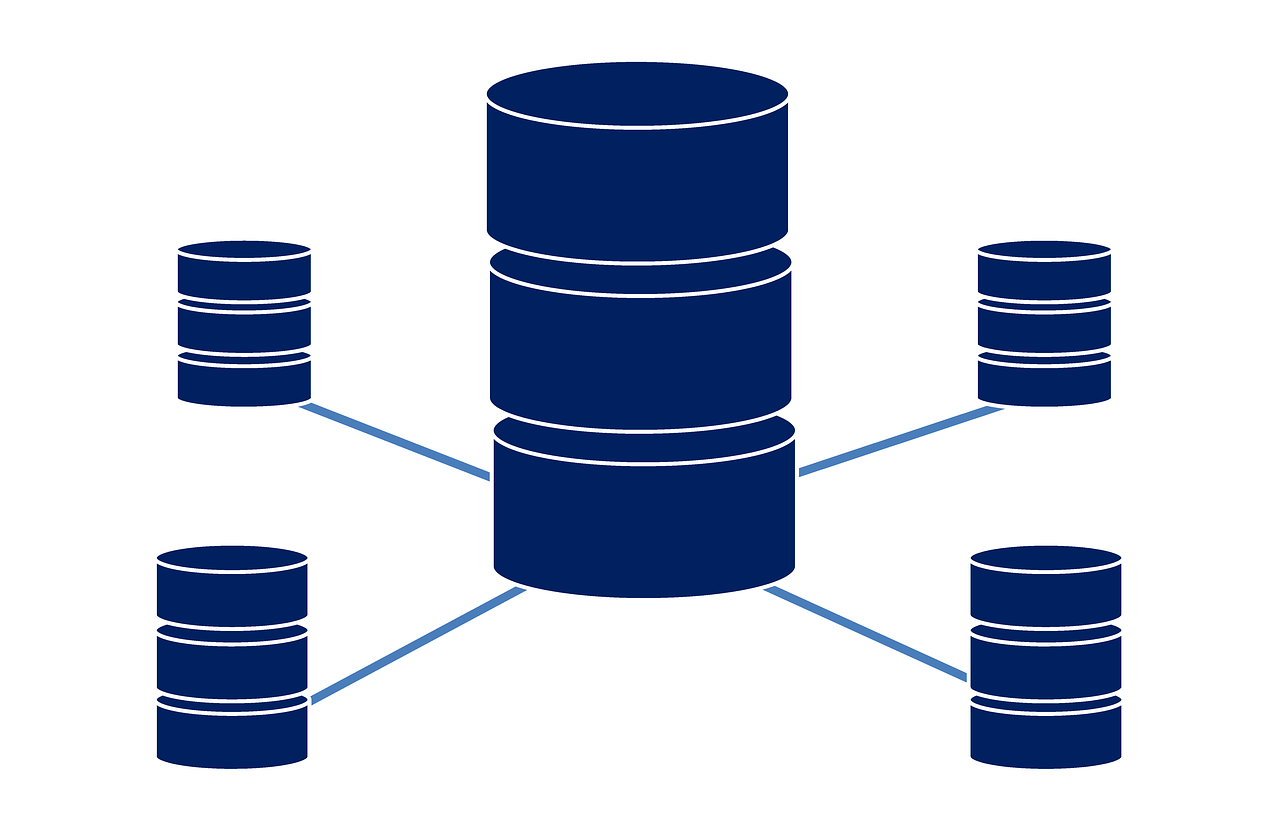

Deployment Automation

Most modern software companies have some variation of an automated release pipeline in which the application can be built, tested, distributed, configured, and released with minimal (or even no) manual intervention. Unfortunately, most legacy deployment automation tools simply aren't capable of handling the new FaaS paradigm. With FaaS, a deployment frequently touches many application tiers, including networking, data storage, event streams, and (of course) compute functions. Dependencies need to be precompiled and shipped separately from your functions, and the pipeline has to support complex deployment models, including promotion through progressive environments (dev -> staging -> prod). Be prepared to evaluate new tooling like Stackery for your release pipeline.
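To make the promotion model concrete, here is a minimal sketch of moving one build through progressive environments. The `deploy` and `verify` callables and the `build-42` identifier are hypothetical placeholders for whatever your tooling provides; this is not how Stackery or any particular pipeline implements it.

```python
# Stage names mirror the dev -> staging -> prod progression described above.
STAGES = ("dev", "staging", "prod")


def promote(artifact_id, deploy, verify, stages=STAGES):
    """Deploy one build to each stage in order.

    `deploy` and `verify` are hypothetical callables supplied by the caller.
    Promotion stops at the first stage whose verification fails, so a bad
    build never reaches the stages after it.
    """
    completed = []
    for stage in stages:
        deploy(artifact_id, stage)
        if not verify(artifact_id, stage):
            break
        completed.append(stage)
    return completed


if __name__ == "__main__":
    done = promote(
        "build-42",
        deploy=lambda artifact, stage: print(f"deploying {artifact} to {stage}"),
        verify=lambda artifact, stage: True,
    )
    print("promoted through:", done)
```

The point of the sketch is that the same artifact identifier is reused at every stage, so what reaches prod is exactly what was verified in staging.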

Environment and Secret Management

Similarly, environment and secret management may have some subtle but important differences with serverless. If you plan to provide environment variables and secrets to your application at runtime (to keep them out of your code base), you'll need to consider how your application will look up these values without relying on a local config file. Stackery provides environment-specific key-value stores to keep your code base secure while supplying environment-aware variables at runtime.
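One common way to do a runtime lookup is to have the deployment tooling inject values as environment variables and read them inside the function. The sketch below assumes that approach; the variable names (`ENV_NAME`, `DB_HOST`, `API_TOKEN`) are made up for illustration, and the `environ` parameter exists only so the code can be exercised without touching the real process environment.

```python
import os

# Hypothetical setting names; a real application would define its own.
REQUIRED_KEYS = ("DB_HOST", "API_TOKEN")


def load_config(environ=os.environ):
    """Build a config dict from values injected into the environment."""
    env_name = environ.get("ENV_NAME", "dev")  # assumed name for the stage
    missing = [key for key in REQUIRED_KEYS if key not in environ]
    if missing:
        # Fail fast so a misconfigured function does not run half-configured.
        raise RuntimeError(
            f"missing settings for {env_name}: {', '.join(missing)}"
        )
    config = {key: environ[key] for key in REQUIRED_KEYS}
    config["ENV_NAME"] = env_name
    return config


def handler(event, context, environ=os.environ):
    """Lambda-style entry point that reads its settings at runtime."""
    config = load_config(environ)
    # Return only non-secret values; the token stays inside the function.
    return {"environment": config["ENV_NAME"], "db_host": config["DB_HOST"]}
```

Because nothing environment-specific lives in the code, the same function package can be deployed unchanged to every environment.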

Application Monitoring

If you're used to relying on tools like New Relic or AppDynamics to monitor your production applications, you might need to look elsewhere for your serverless solutions. These products were not designed for FaaS architecture and don't currently support modern serverless applications. Because serverless comes with some unique challenges, like monitoring function cold starts and catching timeouts, you'll probably want to look for an equivalent, serverless-focused tool. Stackery automatically provides logging and error handling (including for timeouts), but you may also be interested in a more robust APM solution like IOPipe.
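To illustrate what cold-start and timeout monitoring involves, here is a rough sketch of a handler wrapper. It assumes an AWS Lambda-style `context` object exposing `get_remaining_time_in_millis()`; the `monitored` helper, the log fields, and the 500 ms threshold are hypothetical choices, not any vendor's implementation. Note that it can only flag invocations that finish close to the limit: a function that is actually killed at the timeout never reaches this code, which is part of why dedicated tooling is useful.

```python
import time


def monitored(handler, log=print, warn_below_ms=500):
    """Wrap a handler to record cold starts and near-timeout invocations."""
    # Wrapping happens once per container, so the first call through the
    # wrapper is treated as that container's cold start.
    state = {"cold": True}

    def wrapper(event, context):
        was_cold = state["cold"]
        state["cold"] = False
        started = time.monotonic()
        try:
            return handler(event, context)
        finally:
            elapsed_ms = (time.monotonic() - started) * 1000
            remaining_ms = context.get_remaining_time_in_millis()
            log({
                "cold_start": was_cold,
                "elapsed_ms": round(elapsed_ms),
                # Little time left means the invocation nearly timed out.
                "near_timeout": remaining_ms < warn_below_ms,
            })

    return wrapper
```

A function would typically be wrapped once at module load, for example `handler = monitored(real_handler)`, so the cold-start flag is tied to the lifetime of the container.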

Local Development, Testing, and Debugging

Serverless architecture presents some new challenges with regard to the development cycle. Generally speaking, it's very difficult (if not outright impossible) to develop entirely locally, so each developer typically needs an AWS (or alternate infrastructure provider) account to deploy into as part of their development workflow. Integration testing is particularly troublesome, and especially important, since FaaS-style applications are generally event-driven and need to be integrated with and tested alongside other service tiers. Debugging serverless applications, which run remotely and lack sophisticated testing solutions, can be tricky, and we haven't seen any great solutions for this on the market yet.
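One partial workaround is to call a function's handler directly with a hand-built event and a stand-in for the downstream tier. The sketch below assumes an S3-style notification shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`) and uses a plain dict as a hypothetical replacement for the data store. It exercises the handler's logic only; it does not replace testing against the real services.

```python
def make_handler(store):
    """Create a handler that writes to an injected store (a dict here)."""
    def handler(event, context):
        processed = []
        for record in event.get("Records", []):
            s3 = record["s3"]
            key = f'{s3["bucket"]["name"]}/{s3["object"]["key"]}'
            store[key] = "processed"  # stand-in for a real data-tier write
            processed.append(key)
        return {"processed": processed}
    return handler


def s3_put_event(bucket, key):
    """Build a minimal synthetic event; real notifications carry more fields."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "s3": {"bucket": {"name": bucket}, "object": {"key": key}},
            }
        ]
    }


if __name__ == "__main__":
    fake_store = {}
    handler = make_handler(fake_store)
    print(handler(s3_put_event("uploads", "report.csv"), None))
    print(fake_store)
```

Injecting the store keeps the handler free of hard-wired service clients, which is what makes this kind of local invocation possible at all.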

Overall, serverless architectures have some tremendous advantages and companies embracing serverless are experiencing a lot of early success. That said, the challenges we've highlighted are ones you'll want to bear in mind as you start to embrace serverless in your organization.