Dealing With the AWS Lambda Invocation Payload Limits

If you've worked with Lambda functions, you may have run across the `RequestEntityTooLargeException - * byte payload is too large for the Event invocation type (limit 131072 bytes)` exception, which AWS Lambda returns when a function is invoked with too large a payload. At the time of writing, AWS Lambda limits payloads to 6 MB for synchronous (RequestResponse) invocations and 128 KB (131,072 bytes) for asynchronous (Event) invocations. I suspect most people don't give these invocation limits much thought until something starts failing.

While rare, certain scenarios are more likely to hit these limits. One is invoking an asynchronous function from a synchronous one, since the synchronous function can accept up to 6 MB but can only forward 128 KB asynchronously. Another is handling larger-than-expected results from a database query. Depending on the situation, there are several ways to deal with this issue.

The easiest solution is to check the message length before invoking the function and then drop or clip the message if it's too large. This is only viable if the message is non-critical to the invocation or if all the critical elements always come first. While somewhat brittle, this approach is relatively easy to implement because the code change is isolated to the invoking function.
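A minimal sketch of the clip approach, assuming the payload is an object with one non-critical string field that is safe to shorten (the field name `details`, and the helpers `byteSize` and `clipField`, are hypothetical). It shortens that field until the serialized payload is below the byte limit, rather than cutting the raw JSON string, which would usually leave invalid JSON. Sizes are measured in UTF-8 bytes because the limit is expressed in bytes, not characters.

```javascript
// Asynchronous (Event) limit quoted above, in bytes.
const EVENT_LIMIT_BYTES = 131072;

// Serialized size of a value as UTF-8 bytes.
function byteSize(value) {
  return Buffer.byteLength(JSON.stringify(value), 'utf8');
}

// Returns `payload` unchanged if it already fits; otherwise returns a copy
// in which only `field` (assumed non-critical) has been shortened.
function clipField(payload, field, limit = EVENT_LIMIT_BYTES) {
  if (byteSize(payload) < limit) return payload;

  const text = String(payload[field] ?? '');

  // Binary search for the longest prefix of `text` that still fits.
  let lo = 0;
  let hi = text.length;
  while (lo < hi) {
    const mid = Math.ceil((lo + hi) / 2);
    const candidate = { ...payload, [field]: text.slice(0, mid) };
    if (byteSize(candidate) < limit) lo = mid;
    else hi = mid - 1;
  }

  const clipped = { ...payload, [field]: text.slice(0, lo) };
  // The other (critical) fields alone may already exceed the limit.
  if (byteSize(clipped) >= limit) {
    throw new Error('Payload is too large even with the field emptied');
  }
  return clipped;
}
```

Throwing when the remaining fields are still too large keeps the failure explicit instead of silently sending a payload that Lambda will reject anyway.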

A more robust solution is to use an Object Store or S3 bucket as your message repository and pass only the message key to the invoked function. This ensures you never run into the invocation limit, but it requires changes to both the invoking and invoked functions and adds latency to every function call, since each message must be both stored and fetched. Deletions can be handled with S3 Object Expiration (lifecycle rules) to avoid incurring even more latency.
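Storing every message and passing only its key is essentially the claim-check pattern. The sketch below assumes a generic asynchronous key/value store with hypothetical `put(key, body)` and `get(key)` methods; in production this would be backed by an S3 bucket, while here a `Map`-based stand-in (`InMemoryStore`, also hypothetical) keeps the example runnable. `toClaimCheck` and `fromClaimCheck` are illustrative names, not part of any library.

```javascript
const crypto = require('crypto');

// Hypothetical in-memory stand-in for an S3-backed message store.
class InMemoryStore {
  constructor() {
    this.objects = new Map();
  }
  async put(key, body) {
    this.objects.set(key, body);
  }
  async get(key) {
    if (!this.objects.has(key)) throw new Error(`No such key: ${key}`);
    return this.objects.get(key);
  }
}

// Invoking side: always store the full message and send only its key.
async function toClaimCheck(store, message) {
  // Random keys avoid collisions between concurrent invocations.
  const key = crypto.randomBytes(16).toString('hex');
  await store.put(key, JSON.stringify(message));
  return { messageKey: key };
}

// Invoked side: redeem the key for the original message.
async function fromClaimCheck(store, event) {
  return JSON.parse(await store.get(event.messageKey));
}
```

Every invocation pays for one write on the invoking side and one read on the invoked side, which is exactly the latency cost described above.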

The third solution is a hybrid. It has the same robustness and code impact as using an Object Store, but only incurs the latency penalty when a message actually exceeds the limit. It relies on two wrapper functions, which I'll call hydrate and dehydrate: messages are 'dehydrated' before a function is invoked and 'rehydrated' inside the invoked function before they're consumed.

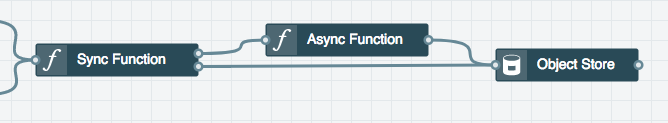

For example, in the Stackery stack above, Sync Function asynchronously invokes Async Function. I've connected both functions to a shared Object Store that serves as the message store when needed. My two wrapper functions are defined as follows:

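```javascript
// Assumes `stackery` is already required in the same file (see the handlers below).
const crypto = require('crypto');

// Replace a message that exceeds the async (Event) payload limit with a
// small pointer object, storing the full message in the Object Store.
function dehydrate (message) {
  const body = JSON.stringify(message);

  // Lambda's limit is in bytes, so measure UTF-8 bytes rather than string length.
  if (Buffer.byteLength(body, 'utf8') > 131071) {
    console.log('Dehydrating message');

    // Timestamp plus random hex keeps keys unique across concurrent invocations.
    const key = `${Date.now()}-${crypto.randomBytes(8).toString('hex')}`;

    const params = {
      action: 'put',
      key: key,
      body: body
    };

    // Port 1 is the connection to the shared Object Store.
    return stackery.output(params, { port: 1 })
      .then(() => {
        return { _messageKey: key };
      });
  } else {
    return Promise.resolve(message);
  }
}

// If the message is a pointer created by dehydrate(), fetch the full
// message from the Object Store; otherwise pass it through unchanged.
function hydrate (message) {
  if (message !== null && typeof message === 'object' &&
      Object.keys(message).length === 1 && '_messageKey' in message) {
    console.log('Hydrating message');

    const params = {
      action: 'get',
      key: message._messageKey
    };

    return stackery.output(params)
      .then((response) => {
        return JSON.parse(response[0].body.toString());
      });
  } else {
    return Promise.resolve(message);
  }
}
```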

Note that dehydrate currently only accounts for the asynchronous limit, but it could easily be extended to handle both. Sync Function now needs to call dehydrate and looks like this:

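```javascript
const stackery = require('stackery');

// dehydrate() from above must also be defined in this file.

module.exports = function handler (message) {
  // Do Stuff
  const response = {}; // placeholder for whatever "Do Stuff" produces

  return dehydrate({ message: message.message })
    .then((dehydratedMessage) => {
      // Send the (possibly dehydrated) message on to Async Function.
      return stackery.output(dehydratedMessage, { waitFor: 'TRANSMISSION' });
    })
    .then(() => {
      return response;
    })
    .catch((error) => {
      console.log(`Sync Function: Error: ${error}`);
    });
};
```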

And likewise, Async Function needs to hydrate the message before consuming it:

```javascript
const stackery = require('stackery');

// hydrate() from above must also be defined in this file.

module.exports = function handler (message) {
  return hydrate(message)
    .then(handleMessage)
    .catch((error) => {
      console.log(`asyncFunction: Error - ${error}`);
    });
};

function handleMessage (message) {
  // Do Stuff
  return {};
}
```

With these two functions (and with Stackery taking care of tedious setup such as the IAM policies that allow the functions to access the S3 bucket), it's relatively straightforward to implement a robust workaround for the AWS Lambda payload limits.
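Extending dehydrate to cover both limits, as noted after the wrapper functions, mostly comes down to choosing the threshold by invocation type. A minimal sketch, assuming the caller knows which invocation type it is about to use; `PAYLOAD_LIMITS` and `needsDehydration` are hypothetical names, and the byte values are the limits quoted at the top of this post (6 MB taken as 6 × 1024 × 1024 bytes).

```javascript
// Invocation payload limits quoted in this post, in bytes.
const PAYLOAD_LIMITS = {
  Event: 128 * 1024,                // asynchronous: 131072 bytes
  RequestResponse: 6 * 1024 * 1024  // synchronous: 6291456 bytes
};

// True when `message` should be stored instead of sent inline.
function needsDehydration(message, invocationType = 'Event') {
  const limit = PAYLOAD_LIMITS[invocationType];
  if (limit === undefined) {
    throw new Error(`Unknown invocation type: ${invocationType}`);
  }
  // Measure UTF-8 bytes: multibyte characters make this larger than .length.
  const bytes = Buffer.byteLength(JSON.stringify(message), 'utf8');
  // Same conservative cutoff as the `> 131071` check in dehydrate.
  return bytes >= limit;
}
```

dehydrate could then take the invocation type as a second argument and call `needsDehydration(message, invocationType)` in place of its hard-coded comparison.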

How Stackery Makes It Easier to Handle Your Serverless Apps

Stackery lets you design your application as something much greater than the sum of its parts. Serverless functions (AWS Lambda functions) need gateways to communicate with the outside world, and databases and storage to store user information. Stackery lets you create your app with all the resources you need in a single canvas, deploy the same application to different AWS regions, and move between multiple AWS accounts.
