Rate Limiting Serverless Apps - Two Patterns

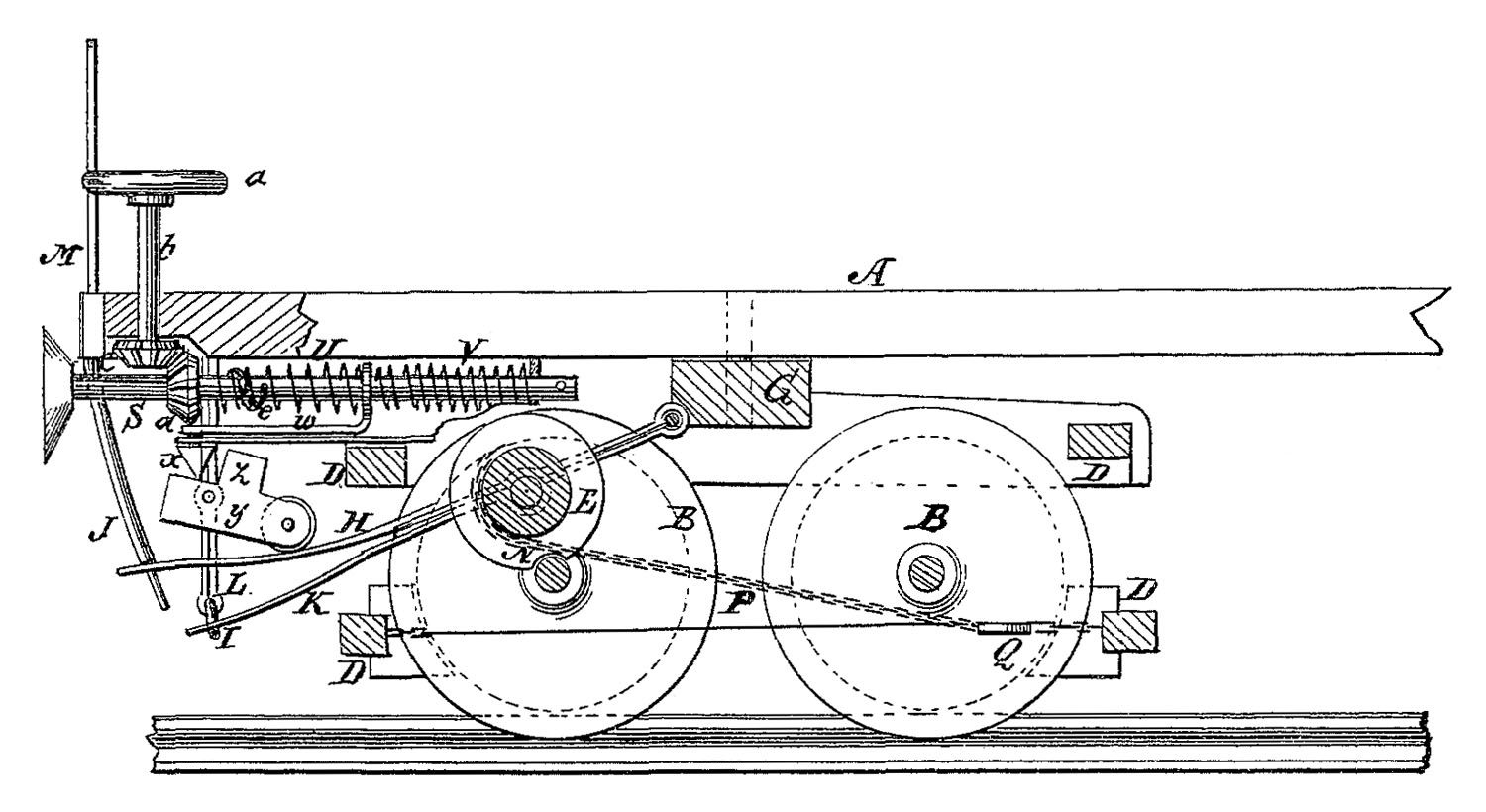

Diagram for an automatic brake patented by Luther Adams in 1873.


Many applications require rate-limiting and concurrency-control mechanisms, and this is especially true when building serverless applications using FaaS components such as AWS Lambda. The scalability of serverless applications can lead them to quickly overwhelm other systems they interact with. For example, a serverless application that fetches data from an external API may overload that system or hit usage limits enforced by the API provider. Steps must be taken to control the rate at which requests are made. AWS Lambda doesn't provide any built-in mechanism for rate-limiting Lambda functions, so engineers need to design and implement these controls within their serverless application stack.

In this post I'll discuss two patterns I've found particularly useful when building applications with rate-limiting requirements in a serverless architecture. I'll walk through how each pattern can be used to control the load serverless applications generate against external resources, then show how to combine them in an example application to limit the rate at which it performs work.

Pattern 1: The Queue Filler

The Queue Filler moves work items into a worker queue at a configurable rate. For example, a serverless application that fetches data through an external HTTPS API may be limited to making 600 requests per minute, but needs to make a total of 6000 requests to access all necessary data. This can be achieved by triggering a Queue Filler function each minute, which moves 600 items from a list of unfetched API URLs into a queue for Worker functions. By controlling the rate at which items are placed into the Worker Queue, we limit the rate at which our application makes requests against the external API. A wide variety of technologies, such as Kinesis streams, Redis, SQS, or DynamoDB, can be used as the queue backend.
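The arithmetic in this example can be checked with a small in-memory model. This is only a sketch of the idea, not the Redis-backed implementation: the `fillQueue` helper, the plain arrays standing in for the backlog and the Worker Queue, and the `api.example.com` URLs are all assumptions made for illustration.

```javascript
// In-memory model of the Queue Filler pattern (illustrative only).
// `fillQueue` is a hypothetical helper; a real application would use a
// shared backend such as Redis or SQS instead of arrays.
function fillQueue(backlog, workerQueue, itemsPerTick) {
  // Remove up to itemsPerTick items from the front of the backlog...
  const batch = backlog.splice(0, itemsPerTick);
  // ...and append them to the worker queue.
  workerQueue.push(...batch);
  return batch.length;
}

// 6000 URLs to fetch, with at most 600 released per minute.
const backlog = Array.from(
  { length: 6000 },
  (_, i) => `https://api.example.com/items/${i}` // placeholder URLs
);
const workerQueue = [];

// Each loop iteration stands in for one timer tick (one minute).
let minutes = 0;
while (backlog.length > 0) {
  fillQueue(backlog, workerQueue, 600);
  minutes += 1;
}

console.log(`Backlog drained in ${minutes} minutes`);
```

At 600 items per tick, the 6000-item backlog takes 10 ticks to release, so the whole job takes at least 10 minutes regardless of how many Workers are available.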

Pattern 2: Spawn N Workers

The Spawn N Workers pattern is used to control how many Worker functions are triggered each minute. If, for example, each Worker function makes 1 request against an external API, we can invoke 600 Worker functions per minute to achieve a throughput of 600 requests per minute. This pattern is also useful for controlling the concurrency of Workers, which may affect the performance of shared resources such as databases.
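When a Worker makes more than one request per invocation, the spawn count has to be scaled down accordingly. A minimal sketch of that calculation follows; `workersPerMinute` is a hypothetical helper, not part of any library.

```javascript
// Hypothetical helper: how many Worker invocations per minute keep the
// application at or below a target request rate.
function workersPerMinute(targetRequestsPerMinute, requestsPerWorker) {
  if (requestsPerWorker <= 0) {
    throw new RangeError('requestsPerWorker must be positive');
  }
  // Round down so the request limit is never exceeded.
  return Math.floor(targetRequestsPerMinute / requestsPerWorker);
}

console.log(workersPerMinute(600, 1)); // 600
console.log(workersPerMinute(600, 4)); // 150
```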

Example Rate Limited Application

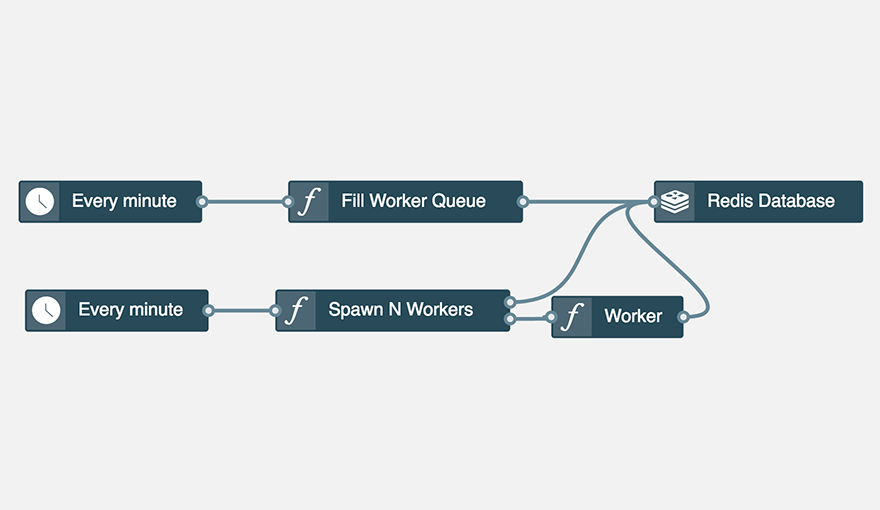

This diagram shows an example serverless architecture that implements the Queue Filler and Spawn N Workers patterns to control the load generated by Worker functions. The architecture consists of 2 Timers, 3 Functions, and a Redis ElastiCache cluster which is used to store rate limit state. Each minute a timer triggers the Fill Worker Queue function, which implements the Queue Filler pattern by moving items from one Redis Set to another. Another timer triggers the Spawn N Workers function, which triggers Worker function invocations based on a config value. This allows us to control the concurrency of Worker requests, and provides a convenient operational safety valve: we can turn the workers off by setting the spawn count to 0.

Fill Worker Queue Function

This function gets invoked once per minute and moves 600 items from the unqueuedItems Redis Set into the workerQueue Set. In some cases it's desirable to fetch the numberToQueue value from Redis (or another data store) so it can be configured dynamically at runtime.

    const Redis = require('ioredis');

    // Set up a new Redis client and connect.
    const redis = new Redis(process.env['REDIS_PORT'], process.env['REDIS_DOMAIN']);

    const unqueuedItemsKey = 'queue:unqueuedItems';
    const queuedItemsKey = 'queue:workerQueue';

    // How many items should be queued for Workers each minute.
    // Usually it's better to fetch this number from Redis so you can
    // control rate limits dynamically at runtime.
    const numberToQueue = 600;

    module.exports = function handler (message) {
      // Move up to 600 items from the unqueued Set to the worker queue Set.
      return redis.spop(unqueuedItemsKey, numberToQueue).then((items) => {
        // SADD needs at least one member, so skip it when nothing was popped.
        if (items.length === 0) {
          return 0;
        }
        // Return the promise so the handler waits for the write to finish.
        return redis.sadd(queuedItemsKey, items).then(() => items.length);
      }).then((count) => {
        console.log(`Successfully queued ${count} items`);
      });
    };
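If the per-minute count is read from Redis instead of being hard-coded, the raw value arrives as a string, or as null when the key is unset. A small guard like the following keeps a bad value from breaking the filler. This is a sketch: `parseRateConfig` is a hypothetical helper, and falling back to a default is one possible choice among several.

```javascript
// Hypothetical helper: turn a raw config value (a string or null, as
// returned by a Redis GET) into a safe, non-negative integer.
function parseRateConfig(rawValue, fallback) {
  const parsed = Number.parseInt(rawValue, 10);
  // Reject missing, non-numeric, and negative values.
  if (Number.isNaN(parsed) || parsed < 0) {
    return fallback;
  }
  return parsed;
}

console.log(parseRateConfig('250', 600)); // 250
console.log(parseRateConfig(null, 600));  // 600
console.log(parseRateConfig('abc', 600)); // 600
```

Inside the handler, the result of a `redis.get` call on a configuration key of your choosing could be passed through this helper before calling SPOP. A stored value of 0 is preserved, so it still works as an off switch.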

Spawn N Workers Function

This function gets invoked once per minute. It fetches a configuration value from Redis, which controls how many Worker functions it invokes. By storing this value in Redis we're able to configure the Worker spawn rate dynamically, without redeploying. This function uses the stackery.output API call to trigger the Worker functions. The {waitFor: 'TRANSMISSION'} option means this function will exit after all Workers have been successfully triggered, rather than waiting until they've completed their work and returned.

    const stackery = require('stackery');
    const Redis = require('ioredis');

    // Set up a new Redis client and connect.
    const redis = new Redis(process.env['REDIS_PORT'], process.env['REDIS_DOMAIN']);

    // Redis key that stores how many worker functions should be spawned each
    // minute.
    const numberOfChildrenRedisKey = 'config:Concurrency';

    module.exports = function handler (message) {
      // Fetch the configuration value of how many worker functions to spawn.
      return redis.get(numberOfChildrenRedisKey).then((value) => {
        // Redis returns a string (or null if the key is unset), so parse it
        // and fall back to 0, which spawns no workers.
        const numberOfChildren = parseInt(value, 10) || 0;
        console.log(`Triggering ${numberOfChildren} functions.`);

        const promises = [];
        for (let i = 0; i < numberOfChildren; i++) {
          // Here we use stackery's output function to trigger downstream worker
          // functions.
          promises.push(stackery.output({}, { waitFor: 'TRANSMISSION' }));
        }
        return Promise.all(promises);
      }).then(() => {
        console.log('Triggered successfully.');
      });
    };

Worker Function

Worker functions pull a work item off the worker queue and process it. It's necessary to use an atomic locking mechanism to prevent multiple workers from locking the same work item. In this example the Redis SPOP command is used for this purpose. It removes and returns a random item from the queue, or null if the queue is empty. If you want to track Worker failures for retries or analysis, you can use Redis SMOVE to move locked items to a locked Set and remove them on success.

    const Redis = require('ioredis');

    const redis = new Redis(process.env['REDIS_PORT'], process.env['REDIS_DOMAIN']);

    const queuedItemsKey = 'queue:workerQueue';

    module.exports = function handler (message) {
      // Use SPOP to atomically pull an item off the worker queue.
      return redis.spop(queuedItemsKey).then((item) => {
        // If we don't get an item, fail.
        if (!item) {
          throw new Error("Couldn't acquire lock.");
        }
        console.log(`got lock for ${item}`);
        return item;
      }).then((item) => {
        // Now that we've pulled an item from the worker queue we do our work.
        // doWork is a placeholder for your application-specific processing.
        return doWork(item);
      });
    };
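The SMOVE-based variant mentioned above could look roughly like the following. This is a sketch under stated assumptions rather than the original application's code: the `processWithLock` helper and the `queue:lockedItems` key are hypothetical, and `redis` is expected to be a client with promise-returning `srandmember`, `smove`, and `srem` methods, as ioredis provides.

```javascript
// Sketch of a Worker that tracks in-flight items in a "locked" Set.
// Key names and the processWithLock helper are hypothetical.
const queuedItemsKey = 'queue:workerQueue';
const lockedItemsKey = 'queue:lockedItems';

async function processWithLock(redis, doWork) {
  // Pick a candidate item without removing it from the queue.
  const item = await redis.srandmember(queuedItemsKey);
  if (!item) {
    throw new Error('Worker queue is empty.');
  }
  // SMOVE is atomic and returns 1 only for the caller that moved the item,
  // so at most one Worker wins the lock for a given item.
  const moved = await redis.smove(queuedItemsKey, lockedItemsKey, item);
  if (moved !== 1) {
    throw new Error(`Another worker locked ${item}.`);
  }
  const result = await doWork(item);
  // Remove the item from the locked Set only after the work succeeds, so
  // failed items stay there for retries or analysis.
  await redis.srem(lockedItemsKey, item);
  return result;
}
```

Anything left in the locked Set after a run is either still in progress or belongs to a Worker that failed, which gives a separate cleanup function a place to look for items to retry.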

Building Ergonomic Controls

As you incorporate rate-limiting mechanisms into your application it's important to consider how you expose the rate limit configuration values to yourself and other engineers on your team. These configuration values can provide a powerful operational tool, giving you the ability to scale your serverless application up and down on demand. Make sure you think through the ergonomics of your control points in various operational scenarios.

I've found that keeping configuration in a datastore which your application accesses is ideal. This allows you to dynamically configure the system without redeploying, which is useful in a wide variety of situations. It gives DevOps engineers the ability to quickly turn off functionality if problems are discovered, or to gradually scale up load to avoid overwhelming shared resources. In advanced cases, teams can configure an application to dynamically respond to external indicators, for example decreasing load if an external API slows down or becomes unavailable. Patterns like Queue Filler and Spawn N Workers are useful when determining how to build these core operational capabilities into serverless applications.
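As an illustration of responding to an external indicator, a periodic function could recompute the spawn count from observed API latency and write it back to the configuration store. The policy below (halve on slowness, otherwise step back up toward a ceiling) is just one possible choice; `nextSpawnCount` and its thresholds are hypothetical and not taken from the example application.

```javascript
// One possible adjustment policy (an assumption for illustration):
// halve the spawn count when the external API looks slow, otherwise
// raise it by a fixed step up to a maximum.
function nextSpawnCount(current, latencyMs, options = {}) {
  const { slowThresholdMs = 2000, step = 50, max = 600 } = options;
  if (latencyMs > slowThresholdMs) {
    // Back off quickly when the API is struggling.
    return Math.floor(current / 2);
  }
  // Recover gradually, never exceeding the configured ceiling.
  return Math.min(max, current + step);
}

console.log(nextSpawnCount(600, 3500)); // 300
console.log(nextSpawnCount(300, 400));  // 350
console.log(nextSpawnCount(580, 400));  // 600
```

Note that this policy will raise a spawn count of 0 back up on the next healthy reading, so a manual off switch is better kept as a separate configuration value that the automatic adjustment doesn't touch.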