Announcing Stackery-Native Provisioned Concurrency Support

The seamless scaling and headache-free reliability of a serverless application architecture have become compelling to a broad community of cloud specialists. But for those who have yet to become converts, one specific issue related to service startup latency, the cold start, has been one of the key objections cited.

Fortunately, the serverless marketplace is maturing. As profiled in my recent post, there’s a whole host of things that used to be a challenge that we no longer have to worry about in 2020 and beyond. The serverless approach — particularly when viewed through the lens of a tool like Stackery — is delivering on simplicity and business focus rather than looking like a science experiment.

The Cold Start issue is simple in nature but nonetheless was previously intractable. Any time AWS Lambdas needed to scale up, the first invocation of each service instance took longer to handle its request than those that followed. There hasn’t been a proper solution up until now, only pragmatic work-arounds that require a certain amount of system “brain surgery.” For example:

- Rewriting functions in languages with shorter startup times

- Sending ‘warm up’ requests to your Lambda before big traffic spikes

- Giving more resources to Lambdas

- Re-engineering your Lambdas to start up faster (e.g. by minimizing dependencies)

All of these solutions add overhead and generally move away from the easy, automatic ethos of serverless as a platform. As of December 2019, these tedious homebrew solutions are no longer necessary: Provisioned Concurrency from AWS can effectively eliminate cold starts with a simple configuration adjustment.
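For readers curious what that configuration adjustment looks like outside of any tooling, here is a minimal sketch in Python. It builds the parameters for the Lambda `PutProvisionedConcurrencyConfig` API, which boto3 exposes as `put_provisioned_concurrency_config`. The function name `checkout-handler`, the alias `live`, and both helper functions are hypothetical and exist only for illustration; this is not how Stackery applies the setting internally.

```python
from typing import Any, Dict


def build_provisioned_concurrency_request(
    function_name: str, qualifier: str, environments: int
) -> Dict[str, Any]:
    """Build keyword arguments for Lambda's PutProvisionedConcurrencyConfig."""
    if environments < 1:
        raise ValueError("environments must be a positive integer")
    if qualifier == "$LATEST":
        # Provisioned Concurrency applies to a published version or an alias.
        raise ValueError("use a published version or alias, not $LATEST")
    return {
        "FunctionName": function_name,
        "Qualifier": qualifier,
        "ProvisionedConcurrentExecutions": environments,
    }


def apply_provisioned_concurrency(lambda_client: Any, request: Dict[str, Any]) -> Any:
    """Send the request through a boto3 Lambda client (or a test stand-in)."""
    return lambda_client.put_provisioned_concurrency_config(**request)


# Hypothetical function name and alias, for illustration only.
request = build_provisioned_concurrency_request("checkout-handler", "live", 100)
```

With boto3 installed and AWS credentials configured, `apply_provisioned_concurrency(boto3.client("lambda"), request)` would submit the change. Keeping the request builder separate from the call makes the settings easy to check without touching an AWS account.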

We have written before about the Provisioned Concurrency feature for AWS Lambda, which effectively ends the threat of cold starts. The excellent Yan Cui also published a full writeup on Provisioned Concurrency’s features and on how it’s really a game-changer for large applications with extreme, predictable spikes in usage.

While Provisioned Concurrency is a really neat AWS feature, it does add some friction to the management of Lambda functions. Even though it’s easier than the homebrew solutions listed above, you still need to configure it. Since Stackery is a tool to make serverless configuration easier, we naturally wanted to add support for Provisioned Concurrency.

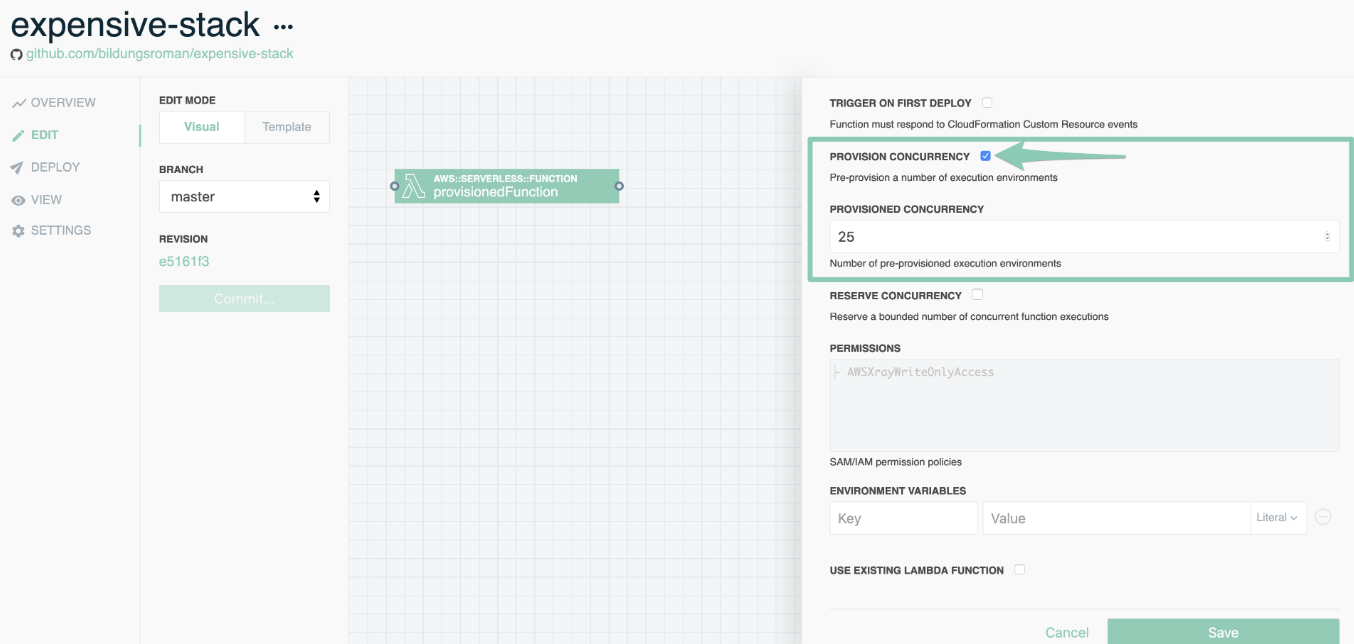

Starting today, Stackery supports Provisioned Concurrency right within our Lambda management tools.

Lambda functions can now be set to have Provisioned Concurrency right in their settings panel within the Stackery Editor. Likewise, the number of execution environments to keep “hot” for incoming requests can be easily preconfigured.

Some important points to remember as you try this new feature:

- Provisioned Concurrency isn’t without cost. A new idle charge of $0.015 per GB-hour means that 100 provisioned execution environments at 256 MB will cost $270 a month. Keep in mind that Lambda costs are rarely significant for production-level traffic, but this charge may be if your team is only experimenting with settings.

- Provisioned Concurrency counts toward the maximum number of Lambda instances you can have running within a region; it comes out of your regional concurrency limit. The solution for critical Lambda functions is to reserve a number of instances that will always be available. Reserved instances can be configured from the Stackery editor, right under the Provisioned Concurrency settings, giving you full control over your instance expenditure.
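To make both points concrete, here is a small sketch that reproduces the $270 figure and shows how provisioned environments eat into a regional limit. It assumes a 30-day month and covers only the idle charge, not request or duration charges. The regional limit of 1,000 is an assumed value for illustration, so check your own account's limit. The helper names are hypothetical.

```python
HOURS_PER_MONTH = 24 * 30   # assumes a 30-day month
PRICE_PER_GB_HOUR = 0.015   # idle charge quoted above, in USD


def monthly_idle_cost(
    environments: int,
    memory_mb: int,
    price_per_gb_hour: float = PRICE_PER_GB_HOUR,
) -> float:
    """Idle charge for keeping `environments` execution environments warm for a month."""
    provisioned_gb = environments * memory_mb / 1024
    return provisioned_gb * price_per_gb_hour * HOURS_PER_MONTH


def remaining_regional_concurrency(regional_limit: int, provisioned: int) -> int:
    """Concurrency left in the region after provisioned environments are set aside."""
    remaining = regional_limit - provisioned
    if remaining < 0:
        raise ValueError("provisioned concurrency exceeds the regional limit")
    return remaining


cost = monthly_idle_cost(environments=100, memory_mb=256)
headroom = remaining_regional_concurrency(regional_limit=1000, provisioned=100)

print(f"Idle charge: ${cost:.2f} per month")        # Idle charge: $270.00 per month
print(f"Remaining regional concurrency: {headroom}")  # Remaining regional concurrency: 900
```

Doubling the memory to 512 MB doubles the idle charge, so it pays to right-size functions before provisioning them.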

Of course, you can also do all this slick editing from within Stackery’s VSCode plugin.

Stackery is a serverless platform that helps software teams build, manage, and deliver secure and consistent serverless applications with speed and expertise. With our environment management tools, you can take your serverless app from test, to staging, to production without having to manually deal with the details of environmental or operational configurations.

When the inventor of serverless tried using Stackery, his time from concept to prototype got 60x faster. With the Stackery Visual Editor, you can create and deploy a complex serverless stack in minutes instead of hours.
