AWS Lambda Cost Optimization

Serverless application architectures put a heavy emphasis on pay-per-use billing models. In this post I'll look at the characteristics of pay-per-use vs. other billing models and discuss how to approach tuning your AWS Lambda usage for the best cost/performance tradeoff.

How Do You Want To Pay For That?

There are basically three ways to pay for your infrastructure.

- Purchase hardware up front. You install it in a datacenter and use it until it breaks or you replace it with newer hardware. This is the oldest method for managing capacity and the least flexible. IT procurement and provisioning cycles are generally measured in weeks, if not months, and as a result it's necessary to provision capacity well ahead of actual need. It's common for servers provisioned into these environments to use 15% of their capacity or less, meaning most capacity is sitting idle most of the time.

- Pay-to-provision. You provision infrastructure using a cloud provider's pay-to-provision Infrastructure as a Service (IaaS). This approach eliminates the long procurement and provisioning cycles since new servers can be spun up at the push of a button. However it's still necessary to provision enough capacity to handle peak load, meaning it's typical to have an (often large) buffer of capacity sitting idle, waiting for the next traffic spike. It's common to see infrastructure provisioned with this approach with an average utilization in the 30-60% range.

- Pay-per-use. This is the most recent infrastructure billing model and it's closely tied to the rise of serverless architectures. Functions as a Service (FaaS) compute services such as AWS Lambda and Azure Functions bill you only for the time your code is running and scale automatically to handle incoming traffic. As a result it's possible to build systems that handle large spikes in load, without having a buffer of idle capacity. This billing model is gaining popularity since it aligns costs closely with usage and it's being applied to an increasing variety of services like databases (both SQL and NoSQL) and Docker-based services.

Approaching AWS Cost Optimization

There are a few things that are important to note before we get into how to optimize your AWS Lambda costs.

- AWS Lambda allows you to choose the amount of memory you want for your function from 128MB to 3GB.

- Based on the memory setting you choose, a proportional amount of CPU and other resources are allocated.

- Billing is based on GB-seconds consumed (memory allocated multiplied by billed duration), meaning a 256MB function invocation that runs for 100ms will cost twice as much as a 128MB function invocation that runs for 100ms.

- For billing purposes the function duration is rounded up to the nearest 100ms. A 128MB function that runs for 50ms will cost the same amount as one that runs for 100ms.
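The billing rules above can be sketched in a few lines of Python. This is a minimal illustration, not an official calculator: the function name `billed_gb_seconds` and the constant `BILLING_INCREMENT_MS` are my own, and the 100ms increment is simply the figure used in this post. It returns GB-seconds rather than dollars, since the per-GB-second price is not covered here.

```python
import math

# Billing granularity as described in this post (an assumption of this sketch).
BILLING_INCREMENT_MS = 100


def billed_gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Return the GB-seconds billed for a single invocation."""
    # Round the duration up to the next full billing increment.
    billed_ms = math.ceil(duration_ms / BILLING_INCREMENT_MS) * BILLING_INCREMENT_MS
    # Convert MB to GB and milliseconds to seconds.
    return (memory_mb / 1024) * (billed_ms / 1000)


# Doubling memory doubles the bill for the same duration.
print(billed_gb_seconds(128, 100), billed_gb_seconds(256, 100))
# A 50ms run is billed the same as a 100ms run.
print(billed_gb_seconds(128, 50), billed_gb_seconds(128, 100))
```

Multiplying the result by your region's per-GB-second price gives the compute charge for the invocation.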

There are also a few questions you should ask yourself before diving into Lambda cost optimization:

- What percentage of my total infrastructure costs is AWS Lambda? In nearly every serverless application FaaS components integrate with resources like databases, queueing systems, and/or virtual networks, and often are a fraction of the overall costs. It may not be worth spending cycles optimizing Lambda costs if they're a small percentage of your total.

- What are the performance requirements of my system? Changing your function's memory settings can have a significant impact on cold start time and overall run time. If parts of your system have low latency requirements you'll want to avoid changes that degrade performance in favor of lower costs.

- Which functions are run most frequently? Since the cost of a single Lambda invocation is insanely low, it makes sense to focus cost optimization on functions with monthly invocation counts in the hundreds of thousands or millions.

AWS Lambda Cost Optimization Metrics

Now let's look at the two primary metrics you'll use when optimizing Lambda cost.

Allocated Memory Utilization

Each time a Lambda function is invoked, two memory-related values are printed to CloudWatch logs. These are labeled Memory Size and Max Memory Used. Memory Size is the function's memory setting (which also controls allocation of CPU resources). Max Memory Used is how much memory was actually used during the function invocation. It may make sense to write a Lambda function that parses these values out of CloudWatch logs and calculates the percentage of allocated memory used. By watching this metric you can decrease memory allocation on functions that are overprovisioned, and watch for increasing memory use that may indicate functions becoming underallocated.
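Here is a rough sketch of the parsing step. It assumes the two values appear on a single log line in the form `Memory Size: N MB` followed by `Max Memory Used: N MB`; the regular expression, the function name `memory_utilization`, and the sample line are all illustrative, so check them against your own log output before relying on them.

```python
import re
from typing import Optional

# Assumed layout of the two memory fields on a Lambda report log line.
MEMORY_PATTERN = re.compile(
    r"Memory Size:\s*(?P<size>\d+)\s*MB\s+Max Memory Used:\s*(?P<used>\d+)\s*MB"
)


def memory_utilization(log_line: str) -> Optional[float]:
    """Return Max Memory Used as a percentage of Memory Size, or None if absent."""
    match = MEMORY_PATTERN.search(log_line)
    if match is None:
        return None  # not a line that carries the memory fields
    size_mb = int(match.group("size"))
    used_mb = int(match.group("used"))
    return 100.0 * used_mb / size_mb


# Made-up sample line for illustration only.
sample = (
    "REPORT RequestId: example-request-id\t"
    "Duration: 12.50 ms\tBilled Duration: 100 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 128 MB"
)
print(memory_utilization(sample))
```

A function that consistently reports a low percentage is a candidate for a smaller memory setting, keeping in mind that lowering memory also lowers the CPU allocation.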

Billed Duration Utilization

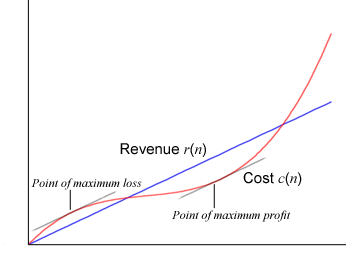

It's important to remember that AWS Lambda usage is billed in 100ms intervals. Like memory usage, Duration and Billed Duration are logged to CloudWatch after each function invocation, and these can be used to calculate a metric representing the percentage of billed time for which your functions were actually running. While 100ms billing intervals are granular compared to most pay-to-provision services, there can still be major cost implications to watch out for. Take, for example, a 1GB function that generally runs in 10ms. Each invocation of this function will be billed as if it takes 100ms, a 10x difference in cost! In this case it may make sense to decrease the memory setting of this function, so its runtime is closer to 100ms with significantly lower costs. An alternative approach is to rewrite the function to perform more work per invocation (in use cases where this is possible), for example processing multiple items from a queue instead of one, to increase Billed Duration Utilization.
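As a sketch, Billed Duration Utilization can be computed as total Duration divided by total Billed Duration across a set of invocations. The function name and the input shape (a list of `(duration_ms, billed_duration_ms)` pairs already extracted from the logs) are assumptions made for this example.

```python
from typing import Iterable, Tuple


def billed_duration_utilization(invocations: Iterable[Tuple[float, float]]) -> float:
    """Percentage of billed time during which the function was actually running.

    Each item is (duration_ms, billed_duration_ms) for one invocation.
    """
    total_duration = 0.0
    total_billed = 0.0
    for duration_ms, billed_ms in invocations:
        total_duration += duration_ms
        total_billed += billed_ms
    if total_billed == 0:
        return 0.0  # no invocations recorded
    return 100.0 * total_duration / total_billed


# The 10ms function from the example above, invoked 1000 times.
print(billed_duration_utilization([(10, 100)] * 1000))
```

A low value means you are paying mostly for rounding, which is the signal to try a smaller memory setting or more work per invocation.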

Conversely, there are cases where increasing the memory setting can result in lower costs and better performance. Take as an example a 1GB function that runs in 110ms. This will be billed as 200ms. Increasing the memory setting (which also controls CPU resources) slightly may allow the function to execute in under 100ms, which will decrease the billed duration by 50% and result in lower costs.
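To make that comparison concrete, here is a small sketch that picks the cheapest setting from a list of measured (memory, duration) pairs. The measurements are hypothetical: I'm assuming a bump from 1024MB to 1152MB brings the run time down to 95ms, which you would need to verify by testing your own function. The helper names are mine.

```python
import math
from typing import List, Tuple


def billed_gb_seconds(memory_mb: int, duration_ms: float, increment_ms: int = 100) -> float:
    """GB-seconds billed for one invocation, rounding duration up to the increment."""
    billed_ms = math.ceil(duration_ms / increment_ms) * increment_ms
    return (memory_mb / 1024) * (billed_ms / 1000)


def cheapest_setting(measurements: List[Tuple[int, float]]) -> Tuple[int, float]:
    """Return the (memory_mb, duration_ms) pair with the lowest billed GB-seconds."""
    return min(measurements, key=lambda m: billed_gb_seconds(m[0], m[1]))


# Hypothetical measurements: (memory setting in MB, observed duration in ms).
measurements = [(1024, 110.0), (1152, 95.0)]
for memory_mb, duration_ms in measurements:
    print(memory_mb, duration_ms, billed_gb_seconds(memory_mb, duration_ms))
print("cheapest:", cheapest_setting(measurements))
```

Under these assumed numbers the larger setting is billed for half the time, which more than offsets the extra memory.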

The New Cost Optimization

The pay-per-use billing model significantly changes the relationship between application code and infrastructure costs, and in many ways enforces a DevOps approach to managing these concerns. Instead of provisioning for peak load, plus a buffer, infrastructure is provisioned on demand and billed based on application performance characteristics. In general this dramatically simplifies the process of tracking utilization and optimizing costs, but it also transforms this concern. Instead of using a pool of servers and monitoring resource utilization, it becomes necessary to track application-level metrics like invocation duration and memory utilization in order to fully understand and optimize costs. Traditional application performance metrics like response time, batch size, and memory utilization now have direct cost implications and can be used as levers to control infrastructure costs. This is yet another example of where serverless technologies are driving the convergence of development and operational concerns. In the serverless world, infrastructure costs and application performance and behavior become highly coupled.
