Popular Posts

- Serverless for the Enterprise (Team Success)

- Going Serverless: Best Practices (Engineering)

- Dangers of Console-Driven Development (Engineering)

- Simple Authentication with AWS Cognito (Engineering)

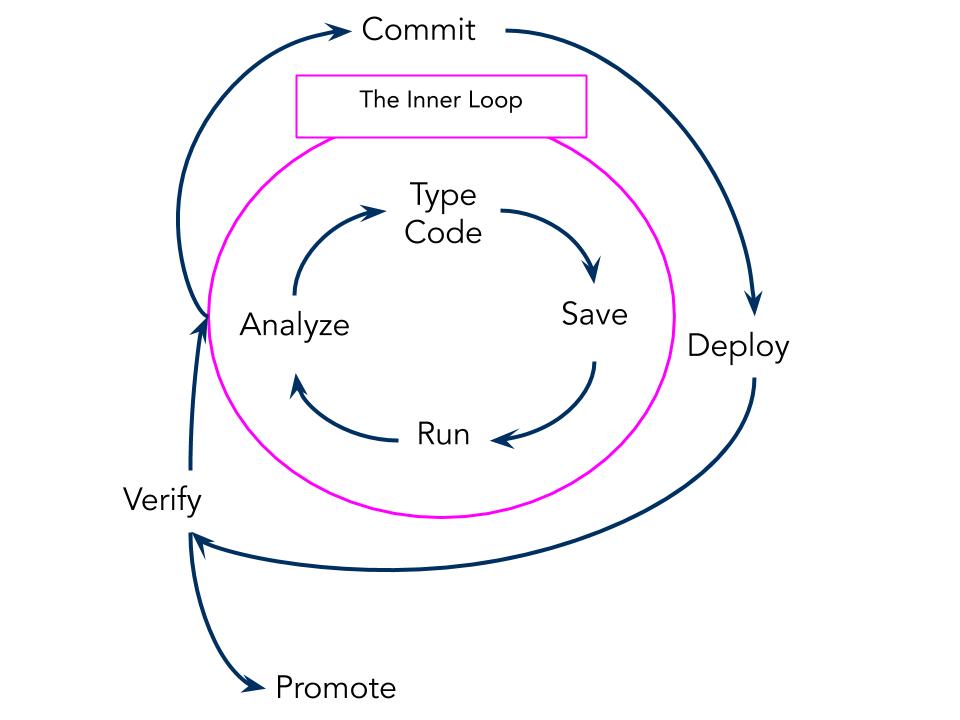

- How to do Serverless Local Development (Engineering)