Why All The Monolithic Serverless API Hate?

A schism exists in serverless land. There are about equal numbers of people on two sides of an important architectural question: Should your APIs be backed by a monolithic function or by independent functions for each endpoint? To the serverless ecosystem’s credit, this schism hasn’t devolved into warring factions.

What fights are like in the serverless ecosystem

That said, some have rationalized splitting API functionality up into independent functions with arguments that boil down to a combination of the following:

- We can now split functionality into nano-services like never before, so why not?

- Justifications on how independent functions are actually easier to track, even though we all probably agree that most tools to track the explosion of serverless resources are still lacking in maturity*

- Reasons why monolithic serverless functions are bad based on code architecture preferences or long cold start times due to inefficient implementations

(*Shameless plug for how Stackery can help with this)

Because the first two arguments are fairly weak, the main justification for APIs backed by independent functions are predicated on perceived problems with monolithic APIs more than why APIs backed by independent functions are better. I’ve yet to see a good argument for why all APIs should be broken up into independent functions. Just because it's possible for monolithic architectures to have problems doesn't prove that swinging to the opposite extreme is ideal.

There are certainly limits to the monolithic approach, but these limits are more due to Conway’s law than technical architecture. Conway’s law suggests that a reasonable approach would be to split an API up into independent functions when there are separate teams managing different parts of the API.

Some worry that cold start times of a monolithic function may be worse due to needing to initialize all the components of the API at once. However, in every language there are reasonable strategies for reducing cold start time. For example, both Node.js and Python make lazy-loading of functionality easy, while Go has low cold start times simply because it’s a fully compiled executable.

This naturally leads to a broader discussion of the effect of function architecture on cold starts. Thankfully, most API use cases are forgiving of cold start latency, but it's not something that can be ignored entirely. For example, we are all aware of the famous studies showing how latency can have an extraordinary impact on revenue for e-commerce. In general, almost everyone building a public serverless API should be monitoring for cold starts in some form.

Yan Cui (aka The Burning Monk and all around brilliant developer) recently wrote that monolithic serverless functions won’t help with cold starts. He makes a valid point that at scale, cold starts become noise. It’s true as well that we will never get rid of cold starts without help from the service providers. But the main thrust of his argument is that you will have the same number of cold starts whether you use a monolithic function or independent functions.

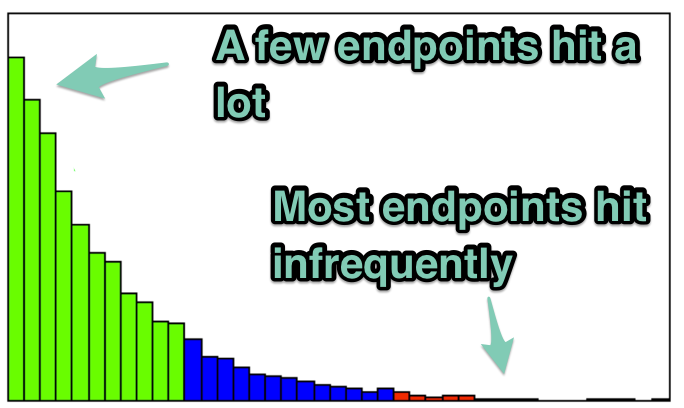

However, there is one incorrect assumption underlying the argument. Yan puts forward an API where every endpoint is hit with the same amount of traffic to analyze the effects of cold starts. In reality, almost no APIs have uniform traffic across all endpoints. Most API endpoint traffic follows a pattern similar to the natural power law. A few endpoints will have a high amount of traffic, but most will have much less. A few endpoints will have very little traffic.

When your API is backed by one monolithic function, the cold starts are spread out among all API requests in proportion to their throughput. In other words, the percentage of requests that trigger a cold start will be the same for all endpoints.

Now let’s examine the implications for APIs backed by independent functions. Imagine you have an endpoint that is hit with 1000 requests per hour, and one that is hit with 5 requests per hour. Depending on the cold start rate for your function, you may find that while you have very few cold starts for the high throughput endpoint, almost every request to the low-throughput function causes a cold start.

Maybe it is ok for your API to have different rates of cold starts per endpoint. But for many APIs this is problematic. Imagine your API has endpoints for both listing items and creating items, where listings are requested much more frequently than item creation requests are. You may have a service-level agreement on latency to be met by each endpoint. It would be better to spread cold starts across all endpoints in this scenario.

While it’s possible to use triggers to keep functions warm, if you have one monolithic function it is much easier to keep it warm than it is to keep many independent functions warm. And, contrary to popular belief, there are meaningful ways to warm functions even at high throughputs, though I’ll leave that discussion for another post.

All architectural choices come with trade offs. But the choice between monolithic and independent API functions is a false dichotomy. There's actually a broad spectrum between all functionality held in a single monolithic function and every three-line helper deployed as a separate microservice. Neither of these is desirable in all cases, which is why the arguments against one or the other are often weak or nonsensical. What folks should be doing is considering how they determine the appropriate boundaries for their API components, and how they manage that over time as their total lines of code, architectural complexity, and number of people involved grows.

Related posts